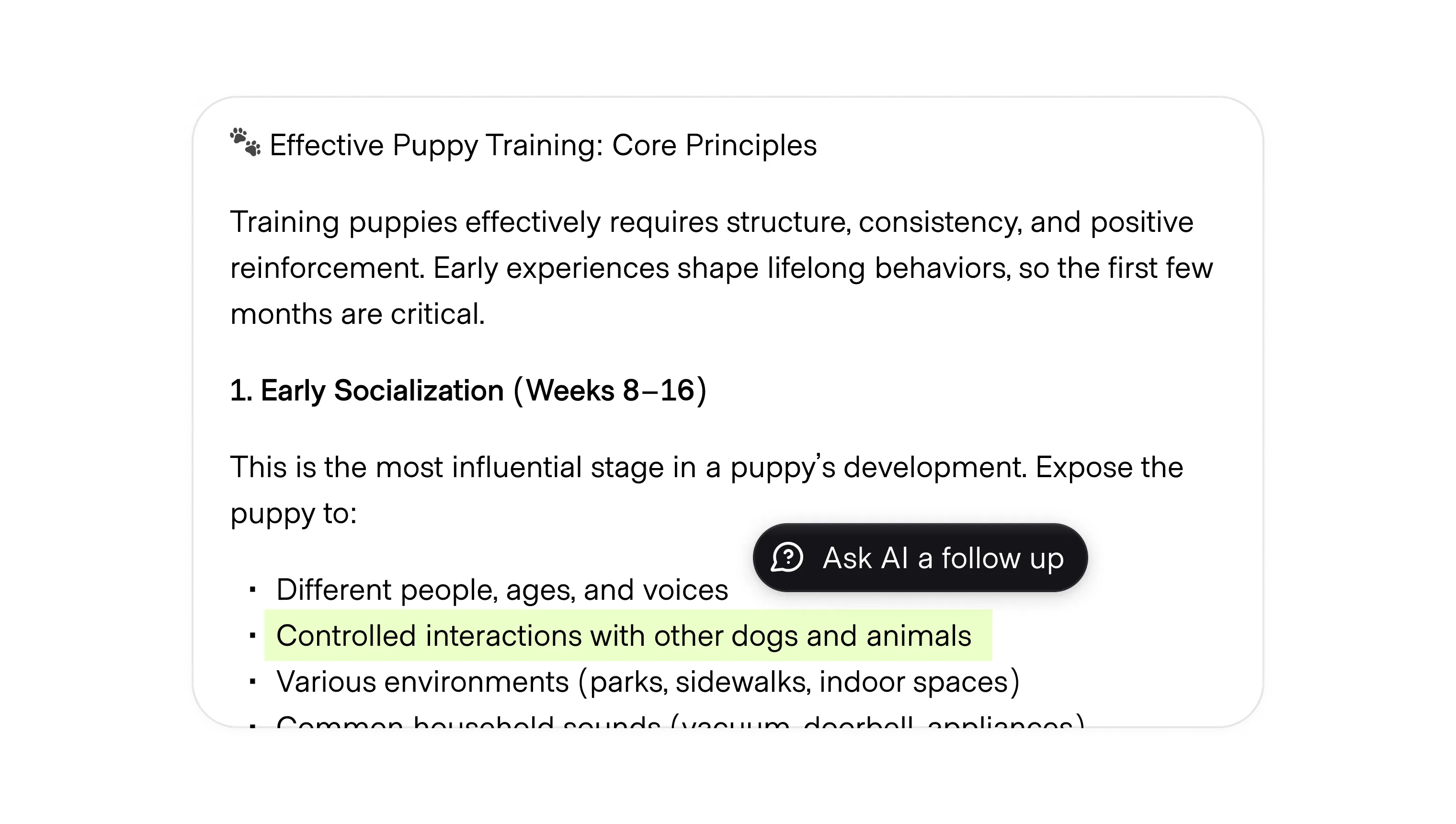

Sometimes a user only wants to adjust or respond to a small part of a larger piece of content or canvas without regenerating the entire response. Inline actions provide a direct way to target specific content, whether by replying, editing, or inserting new material, without breaking the flow of interaction. This gives people more precise control and positions AI as a copilot rather than a system that must always be directed wholesale.

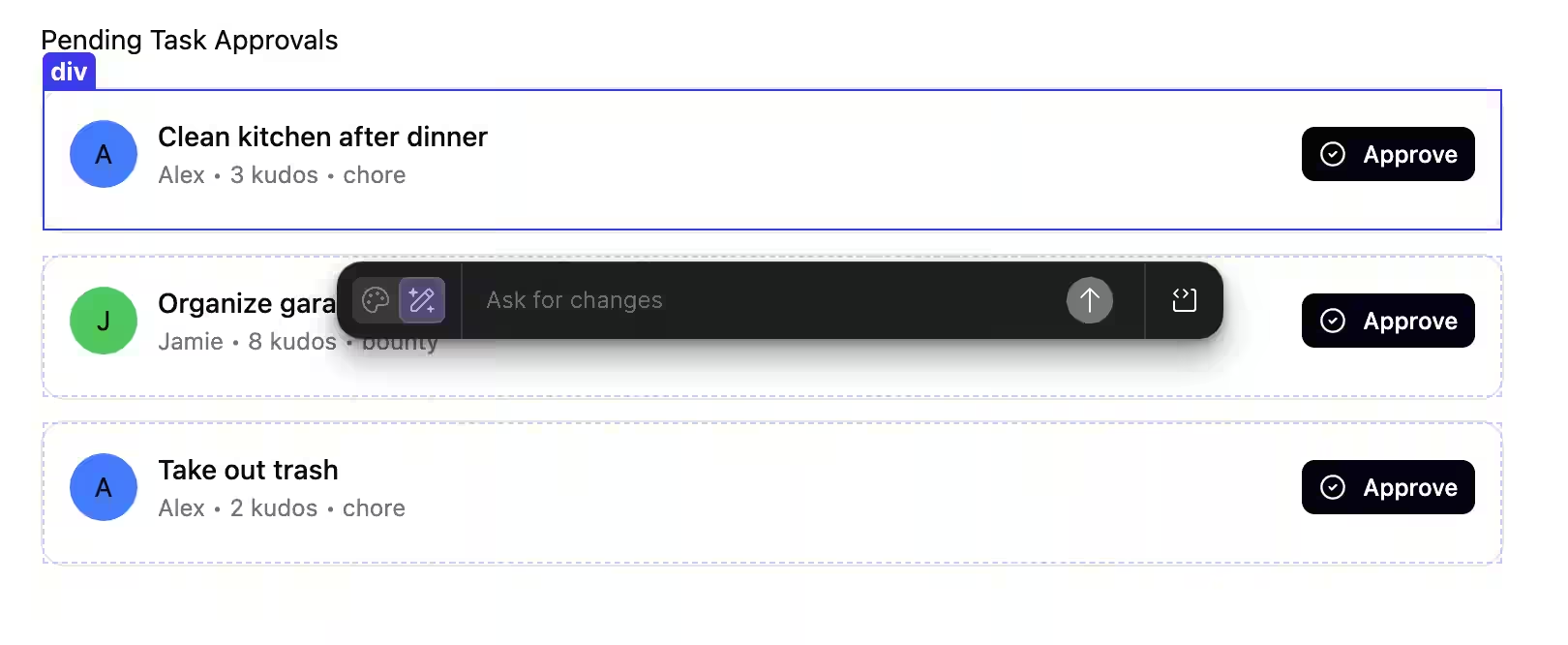

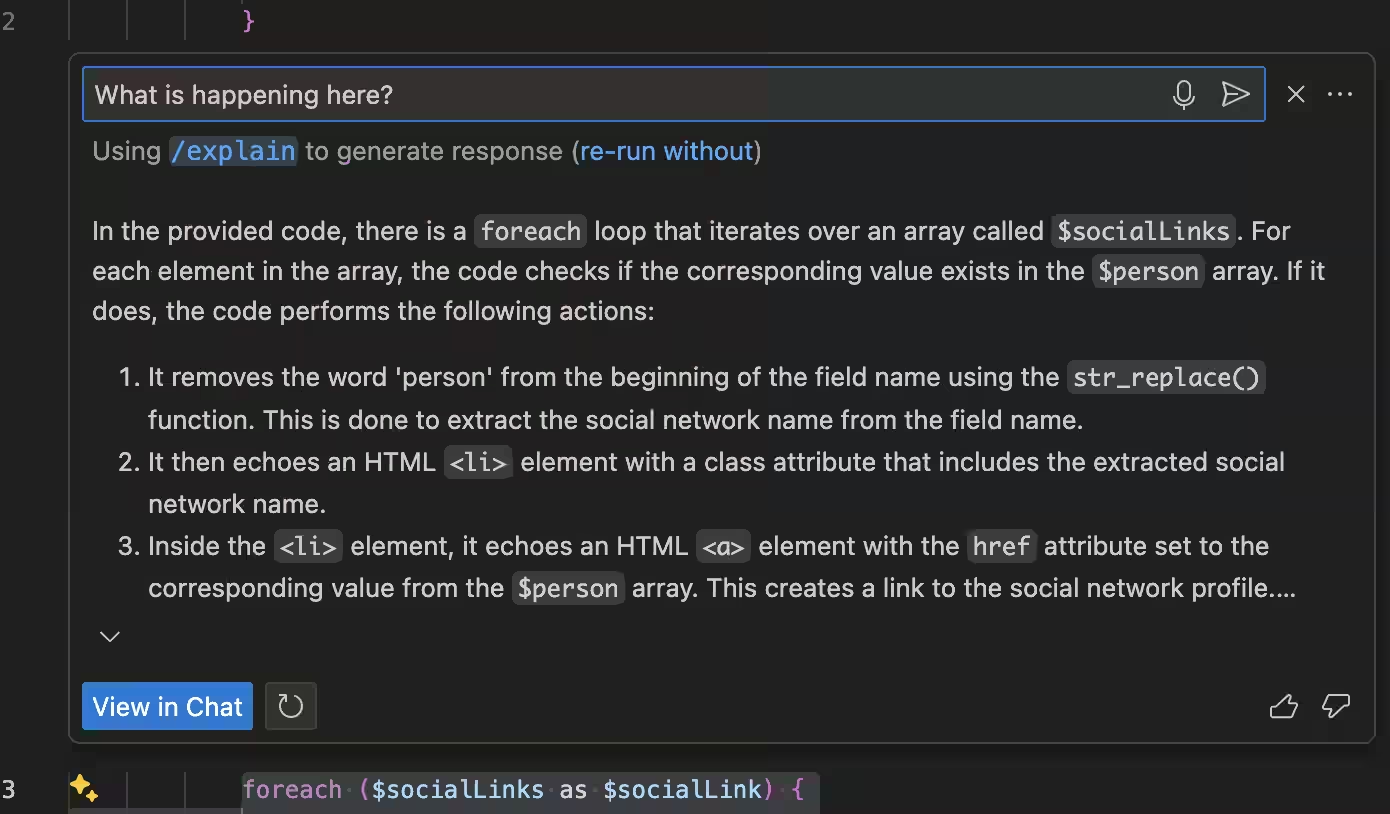

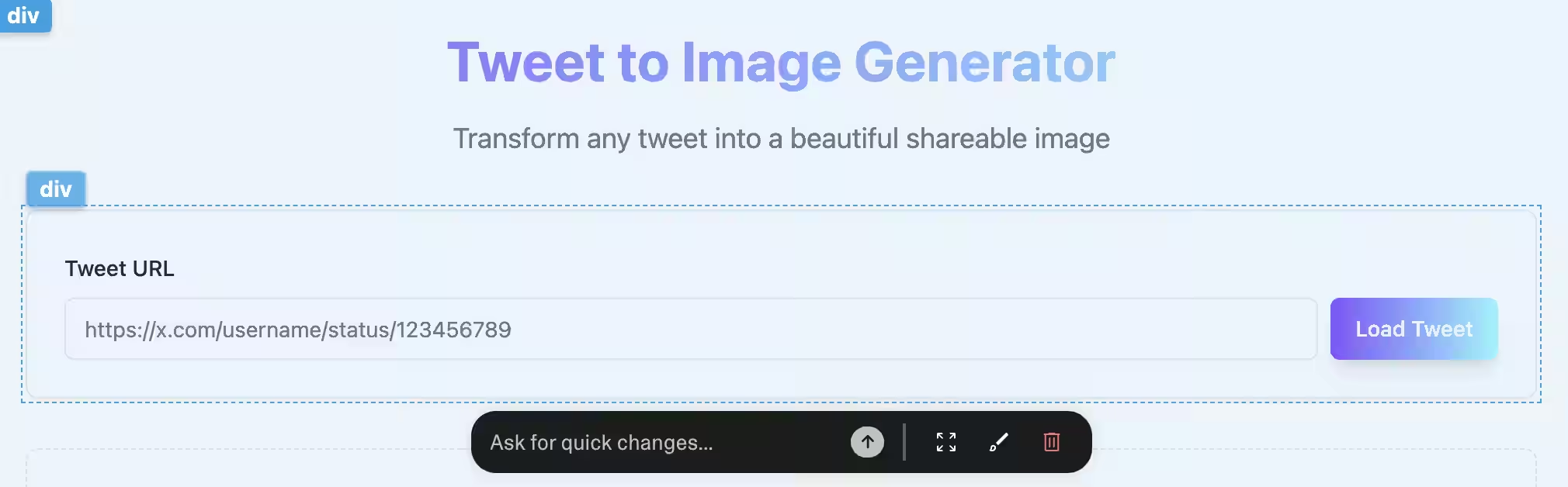

What distinguishes inline actions from similar patterns like inpainting is that they are opinionated. They are preset actions, either hard-coded or recommended contextually as follow-ups, that cover a variety of prompt types.

In essence, these act as shortcuts: a pointer that constrains the AI's focus, paired with a preset prompt that makes the action quick to invoke.
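To make the "pointer plus preset prompt" idea concrete, here is a minimal Python sketch. It assumes a plain-text document and a selection expressed as a character range. The names `InlineAction`, `Selection`, `build_scoped_prompt`, `apply_inline_edit`, and the preset wording are hypothetical illustrations, not any product's API, and the model call itself is left out: the replacement text is simply passed in.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InlineAction:
    """A preset action: a UI label plus a canned instruction (hypothetical)."""
    label: str
    instruction: str


@dataclass(frozen=True)
class Selection:
    """The 'pointer': a character range [start, end) within the document."""
    start: int
    end: int


# Hypothetical presets, for illustration only.
PRESETS = {
    "shorten": InlineAction("Shorten", "Rewrite the selected text to be more concise."),
    "formal": InlineAction("Make formal", "Rewrite the selected text in a more formal tone."),
}


def _check(document: str, sel: Selection) -> None:
    # Reject selections that fall outside the document.
    if not 0 <= sel.start <= sel.end <= len(document):
        raise ValueError("selection out of range")


def build_scoped_prompt(document: str, sel: Selection, action: InlineAction) -> str:
    """Combine the preset instruction with the targeted span.

    The full document is included as context, but the model is told to
    rewrite only the text inside the <target> tags.
    """
    _check(document, sel)
    target = document[sel.start:sel.end]
    return (
        f"{action.instruction}\n"
        "Only rewrite the text inside the <target> tags; return just the replacement.\n\n"
        f"Context:\n{document}\n\n"
        f"<target>{target}</target>"
    )


def apply_inline_edit(document: str, sel: Selection, replacement: str) -> str:
    """Splice the replacement into the selected range, leaving the rest untouched."""
    _check(document, sel)
    return document[:sel.start] + replacement + document[sel.end:]


if __name__ == "__main__":
    doc = "Hello world. Goodbye world."
    sel = Selection(0, 12)  # covers "Hello world."
    print(build_scoped_prompt(doc, sel, PRESETS["shorten"]))
    print(apply_inline_edit(doc, sel, "Hi."))
```

The design choice worth noting is that only the selected range is ever rewritten: the rest of the content passes through unchanged, which is what lets an inline action adjust a small part of a response without regenerating the whole thing.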

Inline actions are not limited to text. In multimodal systems, targeted prompts can be applied to images, audio, or even live conversations. For example, during the GPT-4o launch demo, a presenter redirected the model mid-interaction by instructing it over video to forget an earlier statement and focus on the current question.


This last example illustrates the real strength of inline actions: when users can fully direct the AI’s attention, they shift from training the system to using it as a tool to accomplish specific goals.