Memory gives AI systems the ability to retain and reuse information across interactions, creating continuity for the user. Instead of starting from scratch in every session, the system can recall prior context, preferences, or facts and apply them to new tasks. This shifts the AI from a transactional tool into a persistent assistant.

A well-designed memory reduces friction. Instructions about tone, format, or context can be remembered rather than repeated, closing the loop between user feedback and better results. When users see their input carried forward, they are more likely to invest in longer-term use.

But memory also raises risks. Without clear controls, the AI may misremember, overgeneralize, or accumulate irrelevant or incorrect details. This can frustrate users or even produce a form of “AI psychosis,” where the model develops distorted or unstable behaviors from flawed recollections. Unlike ephemeral chat, memory demands mechanisms for review, correction, and deletion.

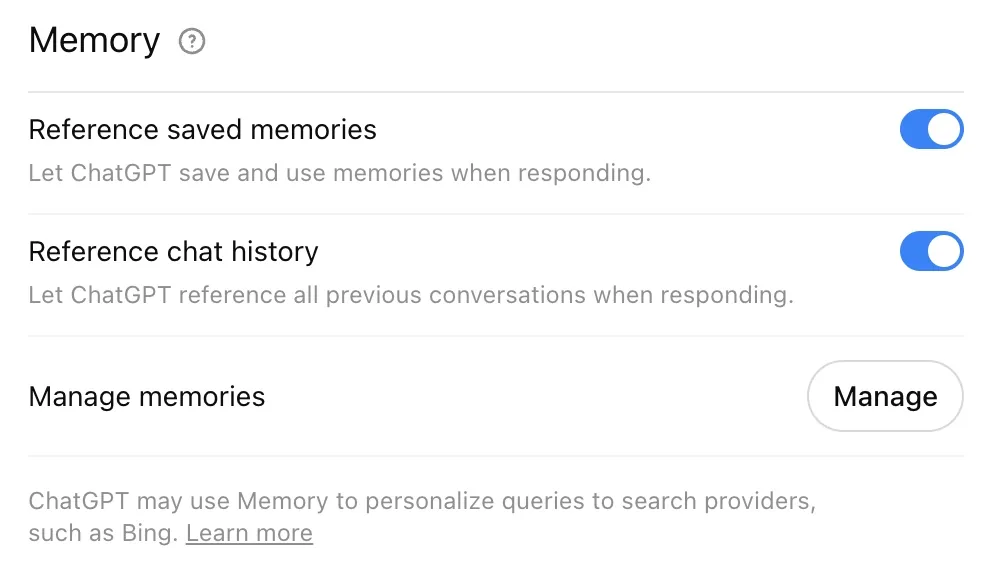

To avoid these failures, memory must be visible and controllable. The design challenge is balancing continuity with transparency and user agency.

Key dimensions

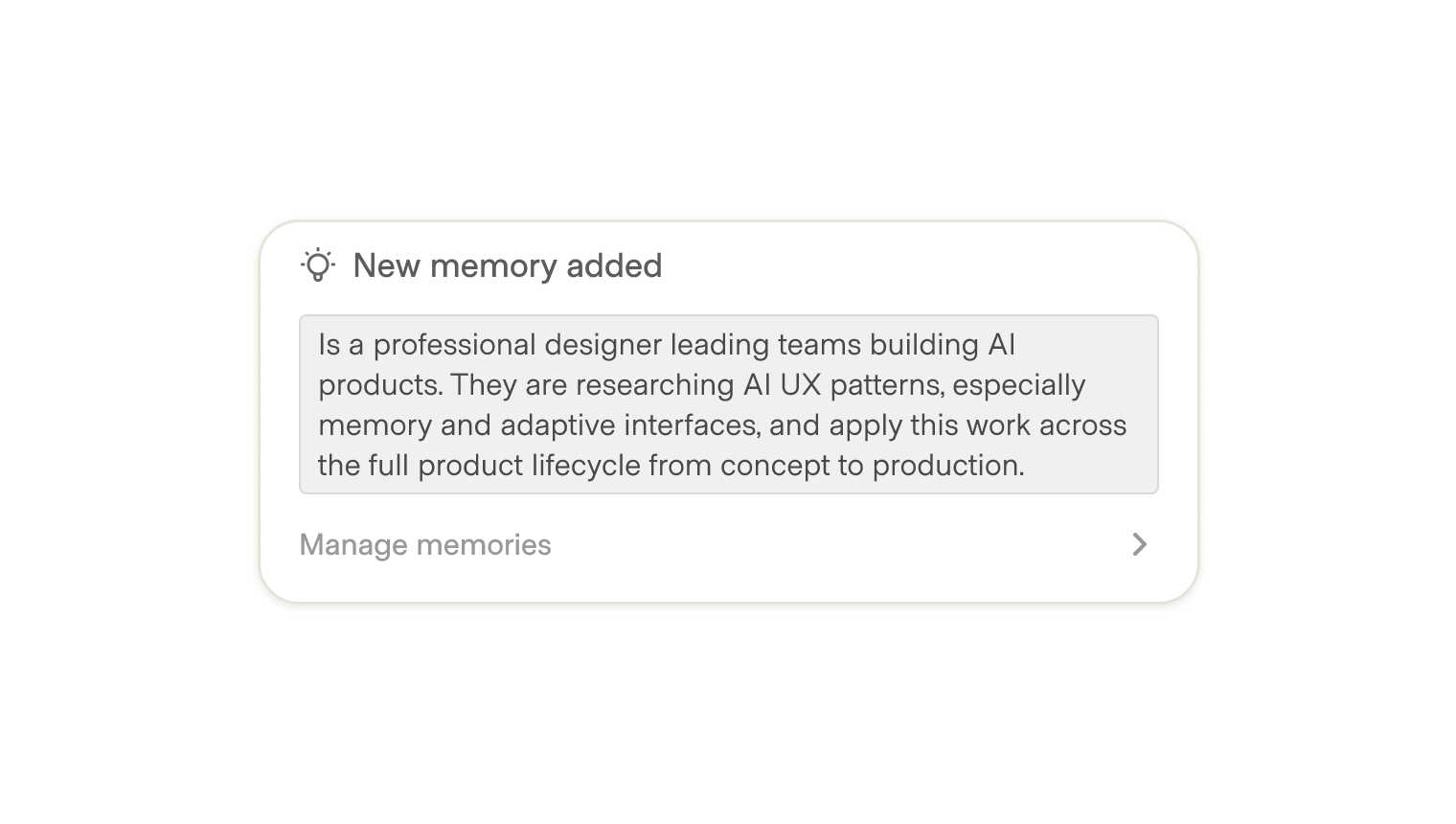

- Knowledge map: Unless the user is in a private or incognito mode, a memory-enabled AI may be continuously storing data about activity, preferences, and past interactions. The best implementations surface this information as a knowledge map, showing what has been remembered and how it influences outputs. Without this, memory becomes a black box.

- Scoped memory: Not all memory should be global. Users may prefer information retained only within a project, workspace, or conversation. Scoping to clear boundaries prevents bleed-through (e.g., a personal detail shaping a work document) and makes retention more predictable.

- Editable details: When users can see what the AI has captured, they should be able to curate it. This means selectively editing, removing, or adding details. Strong designs allow both forgetting (to clear incorrect or sensitive information) and explicit saving (to lock in important facts). A living knowledge map evolves to match the user’s intent.

- Clear the cache: Sometimes users need a fresh start. Forcing account deletion or reinstallation is heavy-handed. Instead, provide a “reset memory” option that clears remembered data in one step. Like clearing browser history, it restores trust without requiring users to abandon the tool.
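The four dimensions above can be sketched as a small data structure. The following is a minimal illustration, not a description of how any particular product stores memory: the `MemoryItem` and `MemoryStore` names, the `scope` strings, and the `source` field are all hypothetical and exist only to show how visibility, scoping, editing, and reset might fit together.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


def _now():
    return datetime.now(timezone.utc)


@dataclass
class MemoryItem:
    key: str
    value: str
    scope: str = "global"     # hypothetical labels, e.g. "global" or "project:website"
    source: str = "inferred"  # "inferred" by the AI, or "user" when explicitly saved or edited
    updated_at: datetime = field(default_factory=_now)


class MemoryStore:
    """Illustrative in-memory store; a real system would persist and secure this data."""

    def __init__(self):
        self._items = {}  # (scope, key) -> MemoryItem

    def remember(self, key, value, scope="global", source="inferred"):
        item = MemoryItem(key, value, scope, source)
        self._items[(scope, key)] = item
        return item

    def knowledge_map(self):
        """Knowledge map: everything remembered, ordered for display to the user."""
        return sorted(self._items.values(), key=lambda i: (i.scope, i.key))

    def recall(self, scope):
        """Scoped memory: only global items and items from the active scope apply."""
        return [i for i in self.knowledge_map() if i.scope in ("global", scope)]

    def edit(self, key, value, scope="global"):
        """Editable details: a user correction overrides what the AI inferred."""
        item = self._items[(scope, key)]  # raises KeyError if nothing is stored
        item.value = value
        item.source = "user"
        item.updated_at = _now()
        return item

    def forget(self, key, scope="global"):
        """Remove one item; returns True if something was actually deleted."""
        return self._items.pop((scope, key), None) is not None

    def reset(self):
        """Clear the cache: drop all remembered data in one step."""
        self._items.clear()


store = MemoryStore()
store.remember("tone", "concise")
store.remember("deadline", "Friday", scope="project:website")
store.remember("diet", "vegetarian", scope="personal")

# Only the global preference and the project detail are visible to work in that project.
work_context = [item.key for item in store.recall("project:website")]
```

Keying items by `(scope, key)` is one way to keep a personal detail from shaping a work document: `recall` never returns items from a scope other than the active one. Recording `source` lets an interface distinguish what the AI inferred from what the user explicitly saved or corrected.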

Variations and forms

- Global memory: Information applies across all surfaces and contexts. Works well for persistent preferences like tone or default language. Risks unintended bleed-through. Example: ChatGPT's viewable memory about the user across chats.

- Scoped memory: Information retained within a workspace, project, or conversation. Prevents irrelevant carryover but may reduce convenience. Example: Perplexity's memory within a space.

- Ephemeral memory: Retains context only for the current session, similar to incognito mode. Best for privacy-sensitive scenarios or temporary exploration. Example: Apple’s limited session memory, with selective long-term retention for stored data like names.
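The difference between the three forms comes down to where a session writes and what it is allowed to read. A rough sketch, under the assumption that an ephemeral session neither reads nor writes persistent memory (real products may differ, for example by still reading some stored data), with `MemoryForm` and `Session` as hypothetical names:

```python
from enum import Enum


class MemoryForm(Enum):
    GLOBAL = "global"
    SCOPED = "scoped"
    EPHEMERAL = "ephemeral"


class Session:
    """One conversation. `persistent` stands in for storage that outlives the session."""

    def __init__(self, persistent, form, scope=None):
        if form is MemoryForm.SCOPED and scope is None:
            raise ValueError("a scoped session needs a scope name")
        self._persistent = persistent  # scope name -> {key: value}
        self._scratch = {}             # discarded when the session object goes away
        self.form = form
        self.scope = scope

    def remember(self, key, value):
        if self.form is MemoryForm.EPHEMERAL:
            self._scratch[key] = value  # never reaches persistent storage
        elif self.form is MemoryForm.GLOBAL:
            self._persistent.setdefault("global", {})[key] = value
        else:
            self._persistent.setdefault(self.scope, {})[key] = value

    def visible(self):
        """What the model may draw on during this session."""
        if self.form is MemoryForm.EPHEMERAL:
            return dict(self._scratch)  # assumption: incognito reads nothing persistent
        merged = dict(self._persistent.get("global", {}))
        if self.form is MemoryForm.SCOPED:
            merged.update(self._persistent.get(self.scope, {}))
        return merged


persistent = {}
Session(persistent, MemoryForm.GLOBAL).remember("tone", "concise")
Session(persistent, MemoryForm.SCOPED, "space:research").remember("topic", "batteries")

private = Session(persistent, MemoryForm.EPHEMERAL)
private.remember("name", "Sam")
```

After this runs, `persistent` holds the global tone preference and the research topic under its own scope, while the name given in the ephemeral session exists only in that session's scratch space.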