AI products already rewrite our prompts. Many systems take what you type, adjust it, and then send a stronger instruction to the model. A prompt enhancer brings that process into the open. It sits near the input, suggests concrete additions or rewrites, and turns a rough intent into a clear, constrained prompt before anything runs.

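Enhancers improve the user experience and make more efficient use of compute, because a well-formed prompt is less likely to need repeated attempts to reach the result the user wanted.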

Parameters tune how the model responds to an instruction; prompt enhancers improve the instruction itself, up front. Tools that expand, clarify, or score prompt completeness move from passive guidance to active assistance, helping close skill gaps for non-experts.
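To make "scoring prompt completeness" concrete, here is a minimal sketch, assuming a hypothetical checklist of four prompt elements (audience, format, length, tone) detected by simple keyword matching. The names `CHECKLIST`, `assess_prompt`, and `enhance` are illustrative only. Production enhancers typically use a language model to do this analysis and rewrite; the keyword version just shows the shape of the loop: score the prompt, surface questions for what is missing, then fold the user's answers back in as explicit constraints.

```python
from dataclasses import dataclass, field

# Hypothetical checklist: element -> (keywords suggesting it is already covered,
# question to show the user when it appears to be missing).
CHECKLIST = {
    "audience": (("for ", "audience", "readers"), "Who is this for?"),
    "format": (("list", "table", "bullet", "paragraph", "json"), "What format should the answer take?"),
    "length": (("words", "sentences", "short", "brief", "detailed"), "How long should the answer be?"),
    "tone": (("tone", "formal", "casual", "friendly"), "What tone should it use?"),
}


@dataclass
class Assessment:
    score: float  # fraction of checklist elements detected, from 0.0 to 1.0
    missing: dict[str, str] = field(default_factory=dict)  # element -> question


def assess_prompt(prompt: str) -> Assessment:
    """Score a prompt against the checklist using naive keyword matching."""
    text = prompt.lower()
    missing = {
        element: question
        for element, (keywords, question) in CHECKLIST.items()
        if not any(keyword in text for keyword in keywords)
    }
    covered = len(CHECKLIST) - len(missing)
    return Assessment(score=covered / len(CHECKLIST), missing=missing)


def enhance(prompt: str, answers: dict[str, str]) -> str:
    """Append each answered element to the prompt as an explicit constraint line."""
    lines = [prompt.strip()]
    for element, answer in answers.items():
        lines.append(f"{element.capitalize()}: {answer}")
    return "\n".join(lines)
```

For example, `assess_prompt("Write a product announcement")` scores 0.0 and returns all four questions; once the user answers two of them, `enhance("Write a product announcement", {"audience": "existing customers", "length": "under 100 words"})` produces the prompt followed by the lines `Audience: existing customers` and `Length: under 100 words`. Keeping the additions as visible, labeled lines mirrors the goal of bringing the rewrite into the open rather than hiding it.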

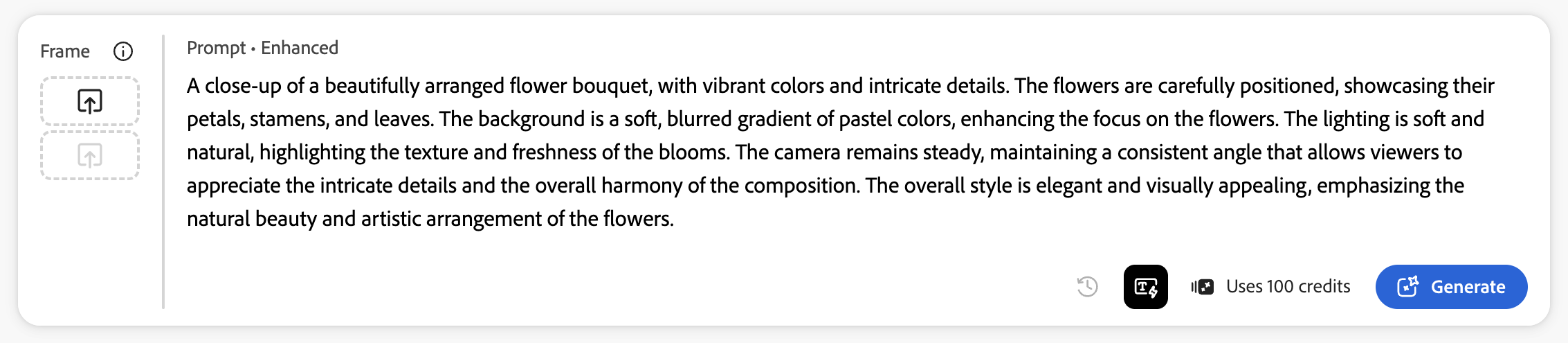

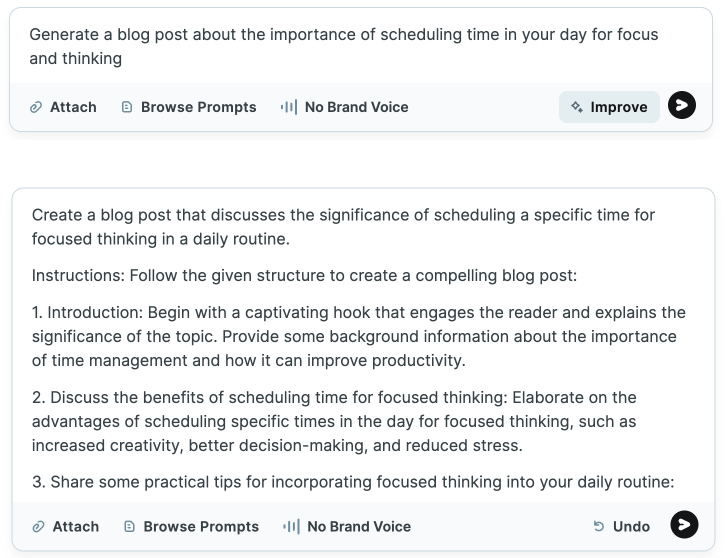

Enhancers show up across modalities. In text tools you see “optimize” or “rewrite” controls in editors and playgrounds. In image and video tools you see style chips, prompt expanders, and reference helpers that add structure behind the scenes while keeping the user’s goal intact.

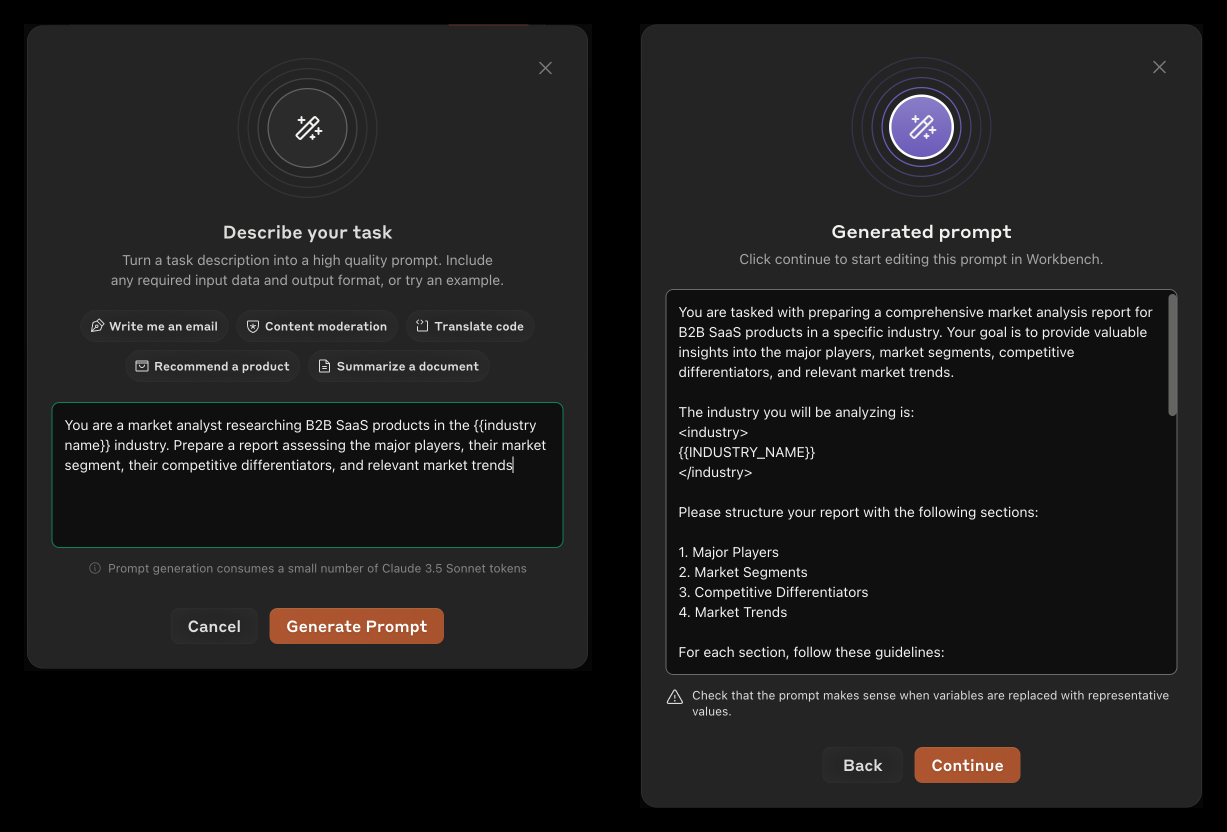

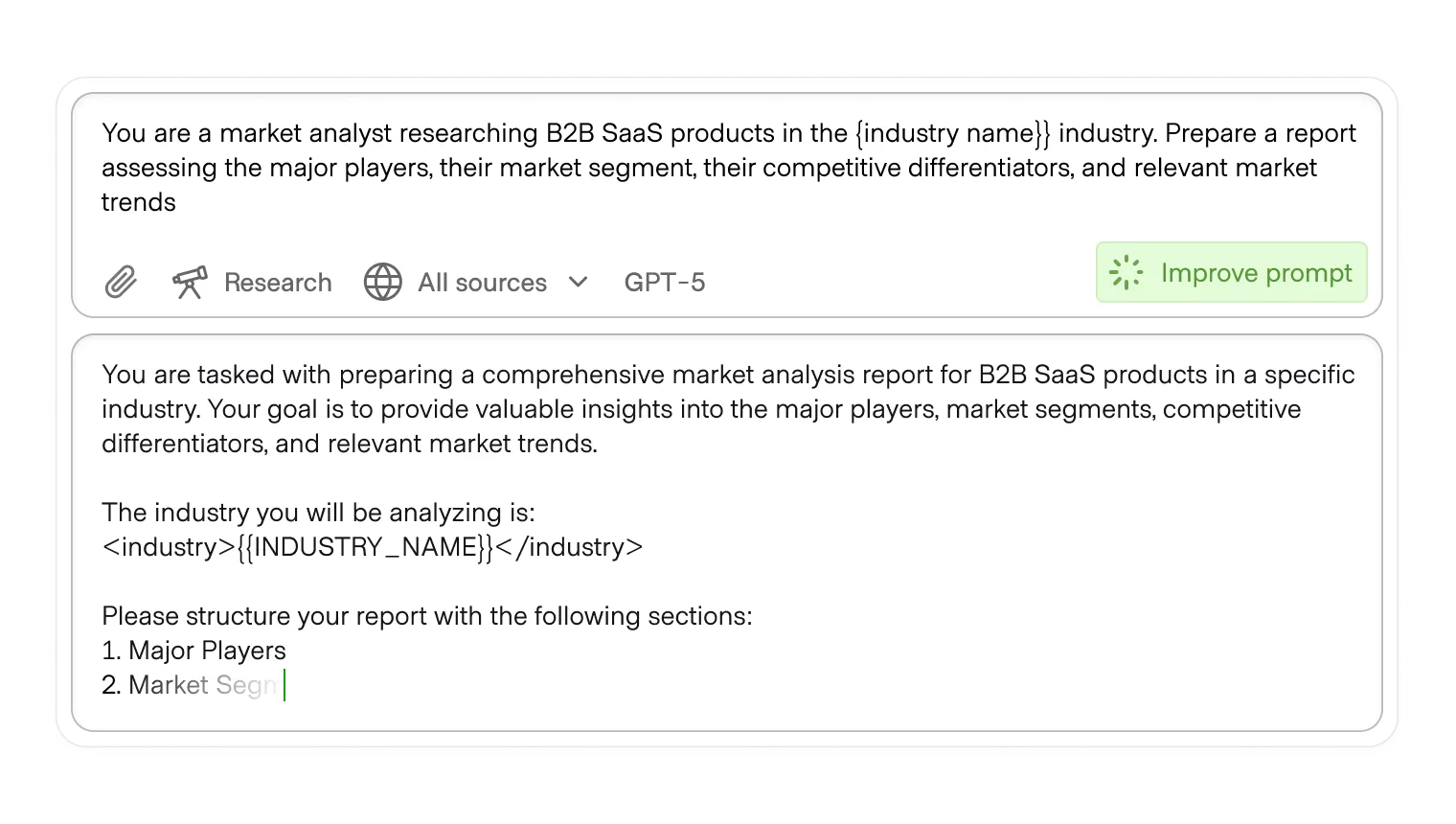

Foundation model providers and many AI products offer a workspace or playground where users can test prompts against the model. Anthropic includes an option to have AI co-write your prompt with you: it takes the user's input and applies prompt-engineering techniques to expand and structure it. This lets users see how the AI turns their rough input into an improved prompt, and why those changes are likely to produce a better outcome.