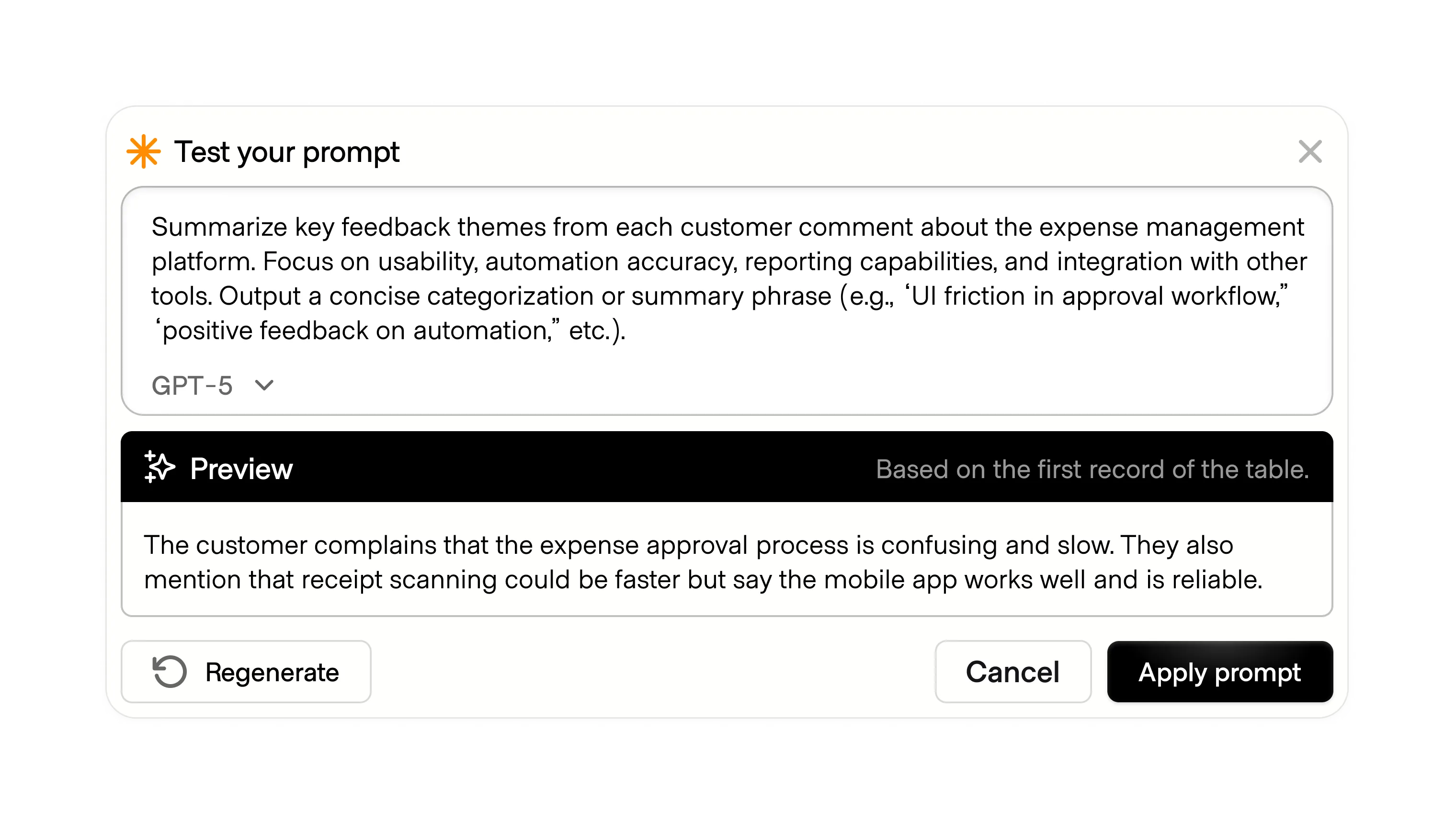

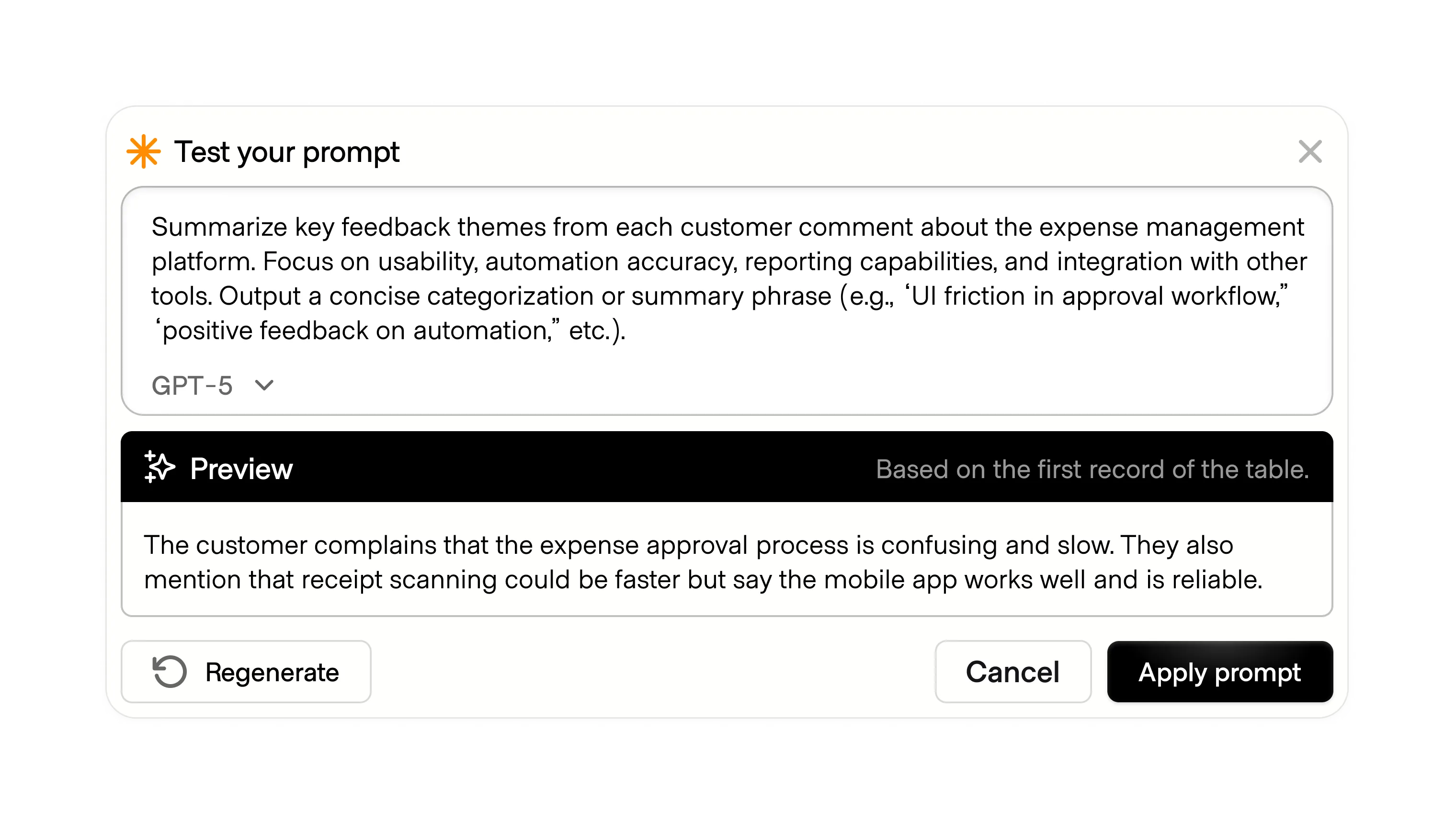

Sample responses are lightweight outputs generated before committing to a full, time-intensive result. They give users a quick preview of what the AI intends to do, letting them confirm direction before resources are consumed or content is overwritten.

This pattern shifts control back to the user. Instead of immediately processing a long draft, large file, or expensive computation, the user sees a short proof of concept. If the sample looks misaligned, they can correct the input early. If it looks right, they can proceed with confidence.
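The flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: `run_with_sample` and all of its callable parameters are hypothetical names, standing in for whatever model calls and UI prompts a real product would use.

```python
from typing import Callable, Optional


def run_with_sample(
    prompt: str,
    generate_sample: Callable[[str], str],
    generate_full: Callable[[str], str],
    approve: Callable[[str], bool],
    revise: Callable[[str, str], Optional[str]],
    max_rounds: int = 3,
) -> Optional[str]:
    """Show a cheap sample first; run the expensive job only after approval.

    Every callable is a hypothetical stand-in: `generate_sample` is the
    cheap preview, `generate_full` the costly run, `approve` the user's
    yes/no decision, and `revise` returns the user's corrected prompt
    (or None if they give up).
    """
    for _ in range(max_rounds):
        sample = generate_sample(prompt)
        if approve(sample):
            # The user confirmed the direction, so the full cost is justified.
            return generate_full(prompt)
        new_prompt = revise(prompt, sample)
        if new_prompt is None:
            return None  # Task abandoned; no full run was spent.
        prompt = new_prompt
    return None  # Too many rejected samples; stop without a full run.
```

The expensive call happens at most once, and only after the user has accepted a sample. The `max_rounds` cap is an assumed safeguard so that repeated previews do not become their own source of friction.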

For AI companies, sample responses conserve compute by preventing wasted runs that the user would likely regenerate or discard. For users, they reduce frustration by catching format or intent mismatches before time and attention become sunk costs.

This approach has precedents outside of AI. Zapier tests workflows with a single record before running them at scale. Spreadsheet macros often run on sample data before applying to the entire sheet. What sets AI apart is the higher cost and unpredictability of generative output. By surfacing a controlled sample, the system makes its direction legible and lets the user remain in charge.

Context matters. A sample may be a single row in a table, a 30-second audio clip instead of a full track, or a thumbnail version of an image. In text, it may take the form of a short preview paragraph before a full draft. Each medium calls for a different balance of fidelity and friction. Too much previewing slows the flow, but the right sample size helps users trust the system without burdening them.
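One way to make these trade-offs explicit is a small per-medium configuration. The sketch below is illustrative only: `SampleSpec`, `SAMPLE_SPECS`, `MIN_RATIO`, and `should_preview` are hypothetical names, the 256-pixel thumbnail size is an assumed value, and the threshold for skipping a preview is an arbitrary choice that a real product would tune.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SampleSpec:
    unit: str  # what the size is measured in
    size: int  # how much to generate for the preview


# Table, audio, and text sizes follow the examples in the text;
# the image thumbnail size is an assumption.
SAMPLE_SPECS = {
    "table": SampleSpec(unit="rows", size=1),
    "audio": SampleSpec(unit="seconds", size=30),
    "image": SampleSpec(unit="pixels_per_side", size=256),
    "text": SampleSpec(unit="paragraphs", size=1),
}

# Assumed threshold: skip the preview when the full job is less than
# this many times larger than the sample itself.
MIN_RATIO = 4


def should_preview(medium: str, full_size: int) -> bool:
    """Return True when a sample is worth the extra step for this job."""
    spec = SAMPLE_SPECS[medium]
    return full_size >= spec.size * MIN_RATIO
```

Under these assumed values, `should_preview("audio", 180)` is true for a three-minute track, while `should_preview("table", 3)` is false: a three-row table is small enough that a preview would only add friction.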