Letting users train the model on styles they can recall later makes AI tools stickier and more useful. Users and account managers need tools to produce outputs that match their team's brand or their personal taste without rebuilding prompts each time. Saved styles reduce manual work while producing reliably similar outputs that match the user's intent.

Saved styles across modalities

While parameters and training methods vary across content types, saved styles can be useful in all forms:

- Writing styles: Users can define preset writing styles with distinct voice and tone, depth of detail, technicality, and so on. A piece of marketing content written to appeal to hiring managers on LinkedIn and a technical paper for researchers may draw from similar context sources, but need to sound completely different.

- Audio voices: Depending on the context, users may want voices with different pacing, emotional projection, or characteristics like inferred age. Examples include creating different characters for an audio book, or different personas for AI presenters.

- Visual styles: Custom-trained styles provide consistent art direction by bundling parameters, references, prompt fragments, and seeds, ensuring reliably similar outputs across multiple runs. These may be remixed for artistic effect.

- Video treatments: Saved details like camera, grade, and look cut down on post-processing and ensure a consistent look and feel across the film. This application is still emerging.

Creating new styles

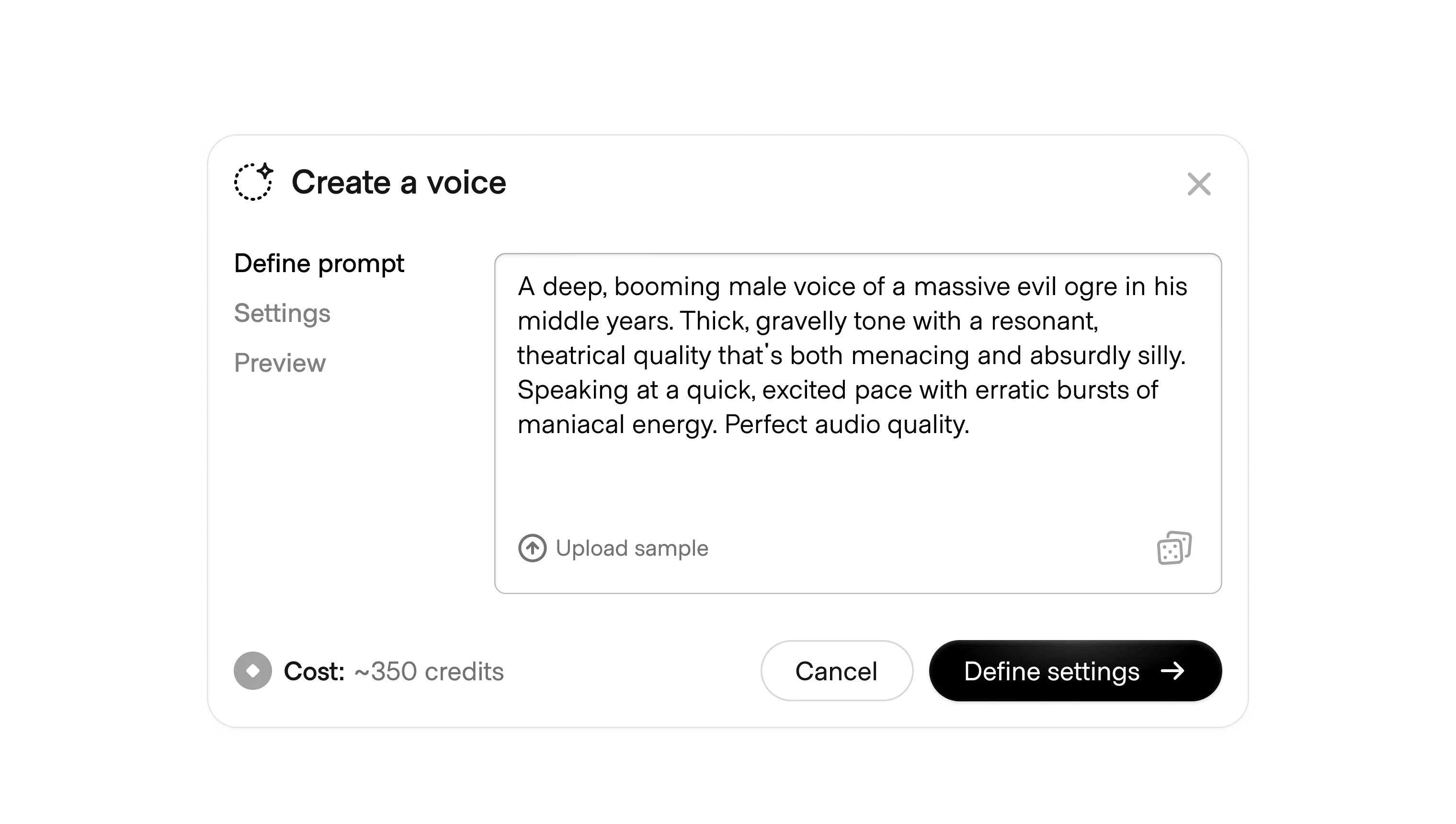

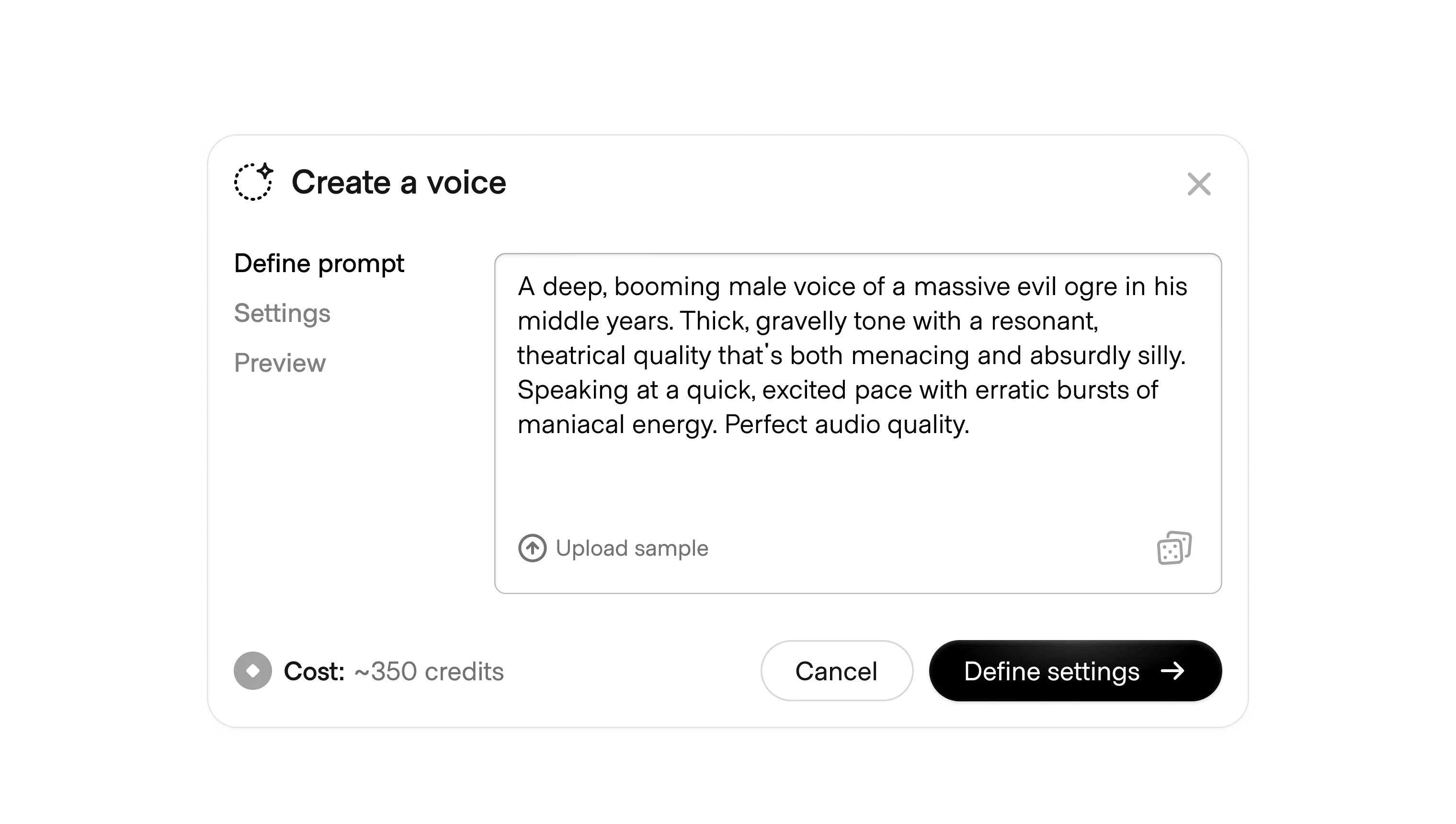

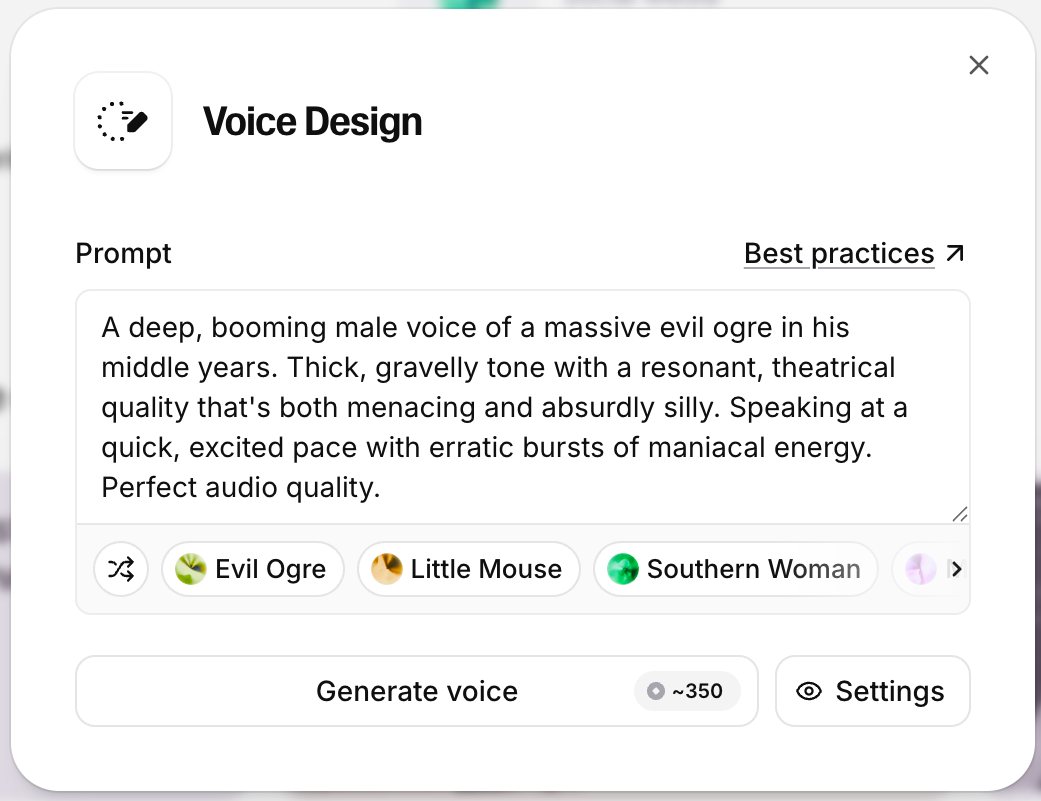

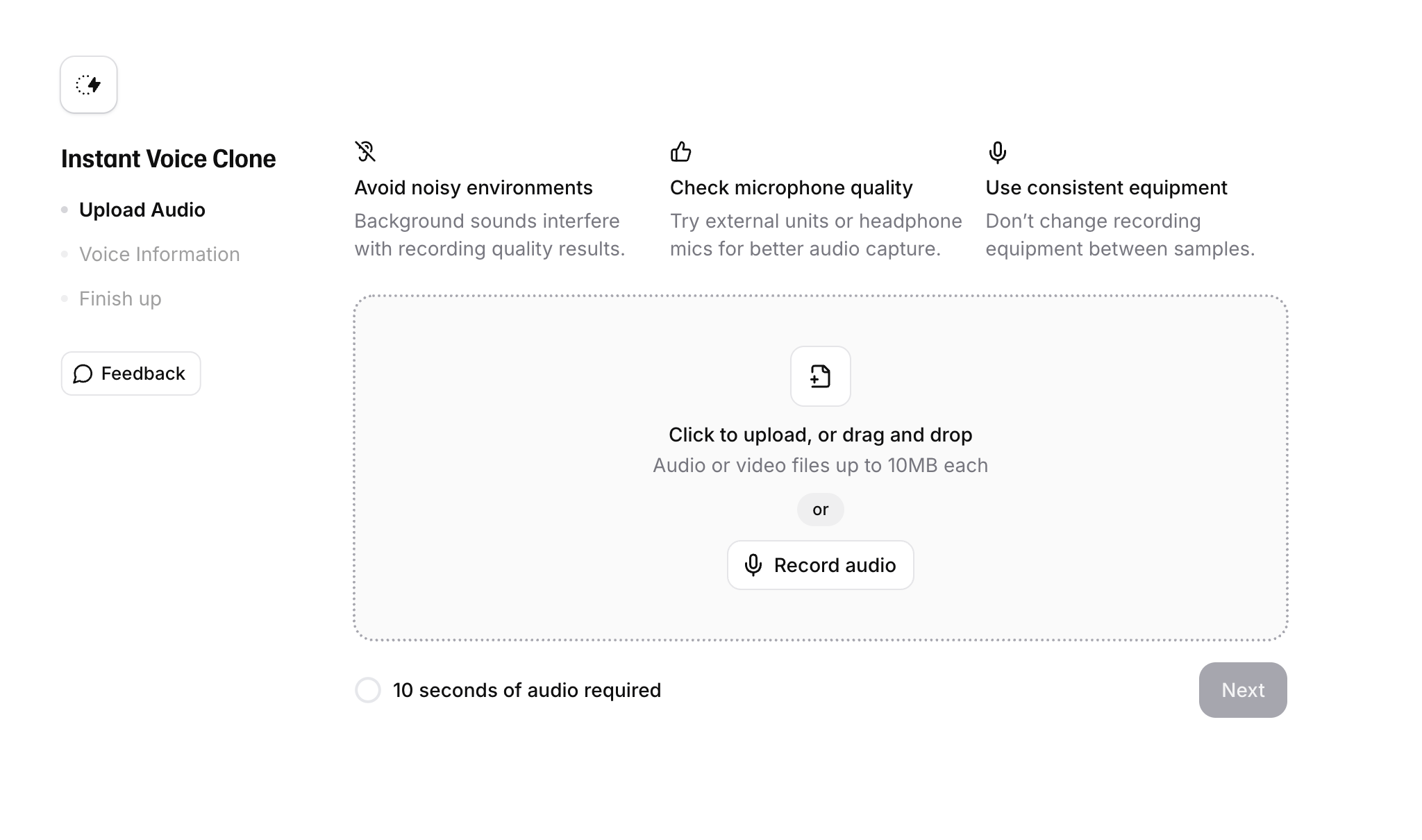

Defining a new custom style may be as simple as using natural language to communicate a general demeanor. More advanced tools offer precise controls and more reliable results:

- Contextual attachments like sample images or voices give the AI concrete references to draw from when generating output.

- Negative prompts, such as words to avoid or tokens to demote, act as guardrails when the style is applied in unanticipated contexts and help keep outputs compliant.

- Setting safe or known words and tokens, such as specific pronunciations of proper nouns or consistent character visuals, ensures consistency across multiple runs.

- Parameters for form and structure, such as emotion or vibe, technical depth, composition, and pacing, keep details intact across multiple generations.

- General prompts that guide aesthetic details, level of detail, realism, and so on can be specific or broad. Ensure the prompt is visible and editable.

Additionally, users may wish to set the temperature of the style when in use, either restraining the model to a strict interpretation of the style or allowing it to reference the style while varying outputs, for example through randomized seeds.
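One way to picture the controls above is as a single record that travels with every generation request. The sketch below is illustrative only: `SavedStyle`, `build_request`, the field names, and the mapping from a `strictness` value to a sampling temperature and seed are all assumptions made for this example, not the API of any particular product.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class SavedStyle:
    """Hypothetical container for a reusable style; all field names are illustrative."""
    name: str
    prompt: str                                   # visible, editable prompt fragment
    negative_terms: tuple = ()                    # words or tokens to avoid
    pinned_terms: dict = field(default_factory=dict)  # e.g. fixed pronunciations
    parameters: dict = field(default_factory=dict)    # tone, pacing, depth, etc.
    references: tuple = ()                        # IDs or paths of sample assets
    strictness: float = 1.0                       # 1.0 = follow closely, 0.0 = loose


def build_request(style: SavedStyle, user_prompt: str, seed: Optional[int] = None) -> dict:
    """Combine a saved style with a one-off prompt into a request payload."""
    if not 0.0 <= style.strictness <= 1.0:
        raise ValueError("strictness must be between 0.0 and 1.0")
    # Assumed mapping: stricter styles get a lower temperature and keep the
    # caller's seed; looser styles raise temperature and drop the seed.
    temperature = round(1.0 - 0.8 * style.strictness, 2)
    return {
        "prompt": f"{style.prompt}\n\n{user_prompt}",
        "negative_prompt": ", ".join(style.negative_terms),
        "pinned_terms": dict(style.pinned_terms),
        "parameters": dict(style.parameters),
        "references": list(style.references),
        "temperature": temperature,
        "seed": seed if style.strictness >= 0.5 else None,
    }


brand = SavedStyle(
    name="brand-voice",
    prompt="Warm, concise, second person.",
    negative_terms=("synergy", "leverage"),
    parameters={"technical_depth": "low"},
)
request = build_request(brand, "Announce the new onboarding flow.", seed=7)
```

Keeping the prompt fragment as a plain string on the record is what makes it easy to show and edit in the interface.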

Applying custom styles

Many products include a style gallery of preset options for users to choose from. Saved styles are commonly added to this gallery at the account or user level so users can apply them when needed.
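A gallery that mixes presets with account-level and user-level styles needs some rule for what a given user sees. The sketch below assumes one plausible rule, where a narrower scope overrides a broader one when names collide; the function name, the scope labels, and the precedence order are hypothetical choices for illustration.

```python
def resolve_gallery(builtin: dict, account: dict, user: dict) -> dict:
    """Merge style collections keyed by name; later scopes override earlier ones."""
    merged = {}
    for scope, styles in (("builtin", builtin), ("account", account), ("user", user)):
        for name, style in styles.items():
            # Record where each entry came from so the UI can label it.
            merged[name] = {"scope": scope, **style}
    return merged


gallery = resolve_gallery(
    builtin={"minimal": {"prompt": "Clean and sparse."}},
    account={"brand-voice": {"prompt": "Warm, concise."}},
    user={"brand-voice": {"prompt": "Warm, concise, a little playful."}},
)
```

Here the user's own `brand-voice` replaces the account version in that user's gallery, while the built-in `minimal` preset stays available.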

For more advanced users, custom LoRA models can be created to extend saved styles beyond prompt-level preferences. LoRA models take a saved style and turn it into something the AI actually learns, embedding tone, aesthetic, or structure directly into its behavior. This allows teams to produce consistently on-brand or personalized outputs across text, image, audio, and video without rebuilding or re-prompting each time.
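To give a rough sense of what "learns" means here, LoRA (low-rank adaptation) leaves the base model's weight matrix frozen and trains two much smaller matrices whose product is added to it. The sketch below shows only that arithmetic, in plain Python with toy matrices. The helper names are made up for this example, and training the small matrices on style examples is omitted.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def apply_lora(weight, lora_b, lora_a, alpha):
    """Return weight + (alpha / rank) * (lora_b @ lora_a); weight itself is unchanged."""
    rank = len(lora_a)            # lora_a has shape (rank, k)
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


def trainable_params(d, k, rank):
    """Parameters in the two low-rank factors for a d x k weight matrix."""
    return rank * (d + k)


base = [[1, 0], [0, 1]]           # frozen base weights (toy 2 x 2 example)
style_b = [[1], [2]]              # shape (2, 1): would be learned from style examples
style_a = [[3, 4]]                # shape (1, 2)
adapted = apply_lora(base, style_b, style_a, alpha=1)
```

For a 768 x 768 layer at rank 8, `trainable_params(768, 768, 8)` gives 12,288 values against 589,824 in the full matrix, which is why a style adapter can be stored and swapped far more cheaply than a full model.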

Whether managed through individual options or a full model, saved styles operate as an extension of a brand system, creating a shared language teams can use across workstreams.