Connectors establish links between an AI and external systems of record, authorizing the product or agent to read and act on data from tools like Drive, Slack, Jira, calendars, CRMs, and internal wikis.

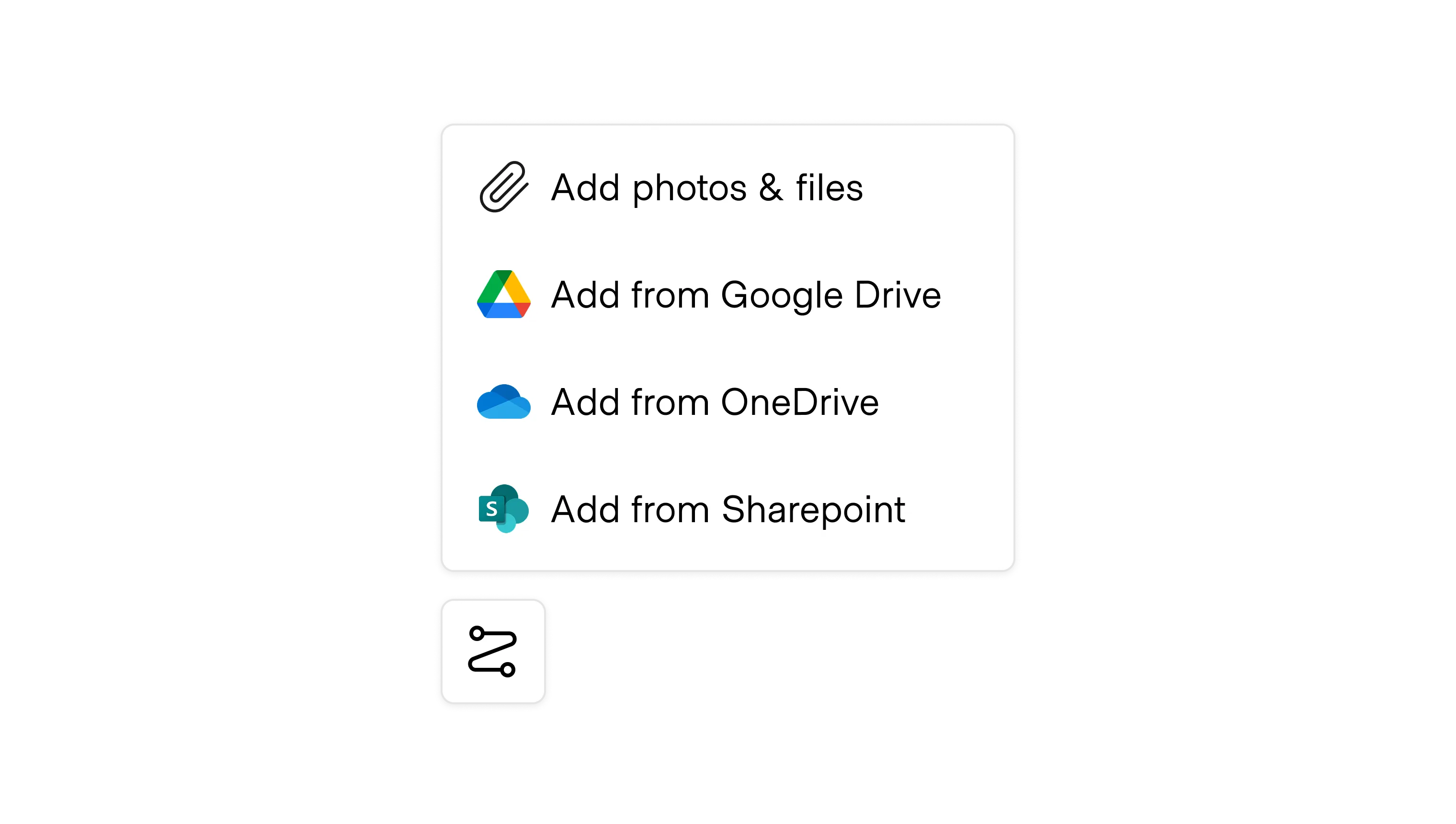

In chat and other open text surfaces, connectors let people ask natural questions and get grounded answers from their own files, messages, and records. Connectors also power background actions like joining meetings, filing tickets, or drafting emails from context.

A well-designed connector makes its scope explicit: what sources are linked, what permissions apply, and whether the AI is retrieving content or making changes. A poor connector hides this, producing answers without showing which system they came from, or allowing actions without confirmation.

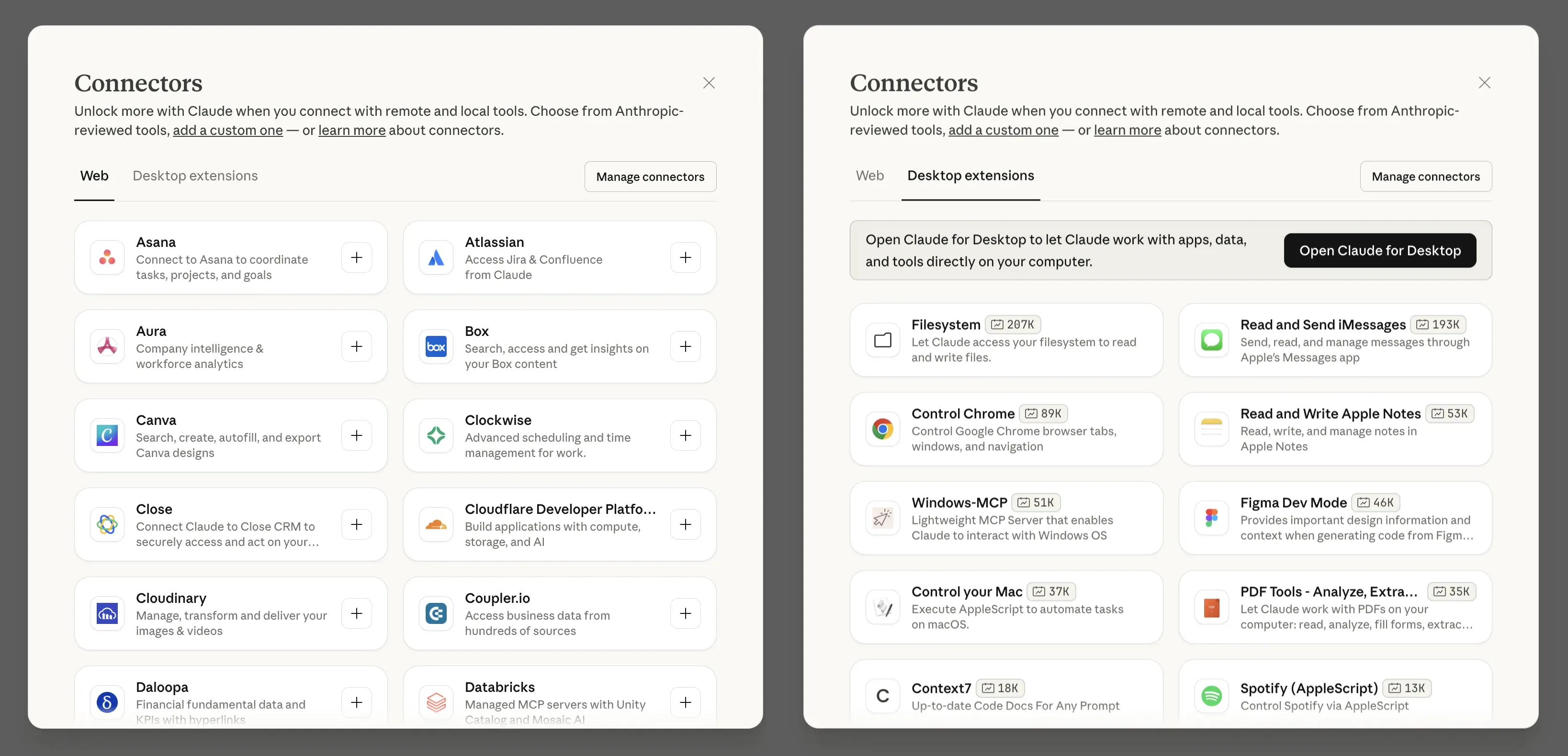

Across products, the pattern shows up in three places:

Connecting AI to real data means it will read content that may include hidden instructions. Malicious instructions in calendar invites, emails, docs, tickets, and wiki pages can all try to steer the model. If those instructions trigger tools through a connector, the AI can exfiltrate data or make unintended changes. Be intentional with your design to secure your experience:

The familiarity and ease of use that AI relies upon can cause users to let down their guard. Be mindful that designing a secure experience is necessary to gaining continuous access to the user’s context over time.