Citations give users a way to see where an AI’s response comes from. They connect a generated output back to its underlying material, whether that’s a PDF, a transcript, a web page, or an internal database. Their role is to create transparency and help users verify information.

Citations also influence the user's perception of AI as an authority, lending credibility to the summarized content. This can backfire: a citation makes a summary look verified even when the summary itself contains hallucinations or misrepresents the source.

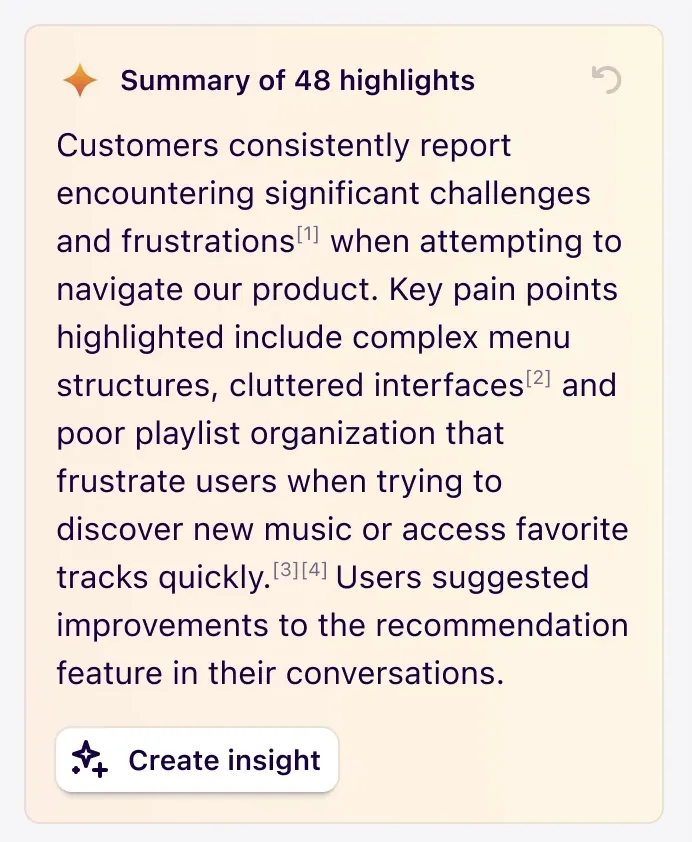

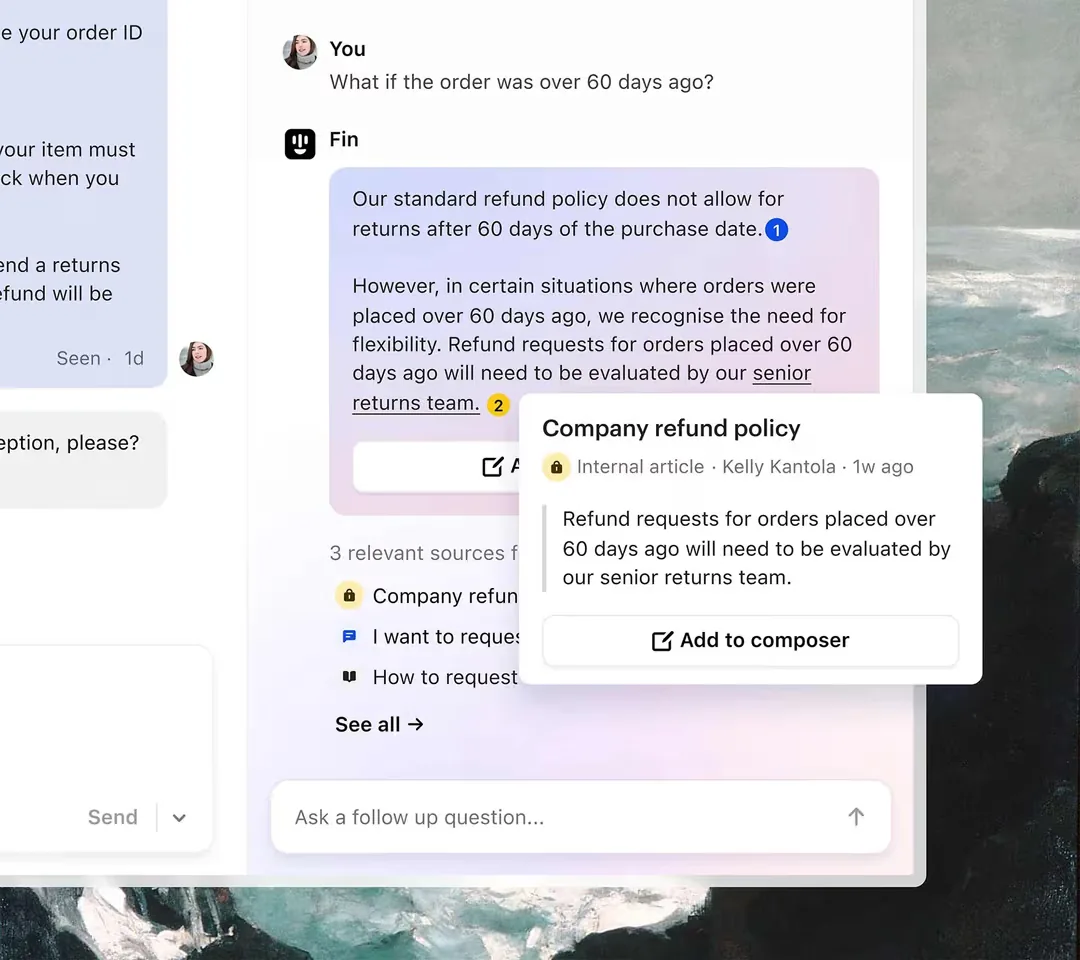

Encourage users to dig into cited content by providing metadata and summaries of the referenced material, which also helps weed out hallucinated sources. To guard against the AI drawing erroneous facts or conclusions from the source material, deep link to the relevant part of the source or pull quotes up into the summary, for example in a preview shown when the user hovers over a citation.
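As a rough illustration of the pull-quote and deep-link idea, the sketch below checks that a quoted passage really appears in the source text before showing it, then builds a link that points at that passage. Everything here is hypothetical: the `Citation` type, the function names, and the hover-card shape are invented for the example, and the deep link assumes the reader's browser supports URL text fragments (`#:~:text=`) and that the source URL has no fragment of its own.

```python
import re
from dataclasses import dataclass
from typing import Optional
from urllib.parse import quote


@dataclass
class Citation:
    """Hypothetical citation record attached to a generated summary."""
    title: str
    url: str
    pull_quote: str  # passage the model claims to have drawn from


def _normalize(text: str) -> str:
    # Collapse whitespace and ignore case so line breaks don't cause false misses.
    return re.sub(r"\s+", " ", text).strip().lower()


def quote_is_in_source(citation: Citation, source_text: str) -> bool:
    """Return True only if the pull quote literally occurs in the source."""
    needle = _normalize(citation.pull_quote)
    if not needle:
        return False  # an empty quote verifies nothing
    return needle in _normalize(source_text)


def deep_link(citation: Citation) -> str:
    """Build a text-fragment link that scrolls to the quoted passage."""
    # Dashes have special meaning in text fragments, so encode them as well.
    encoded = quote(citation.pull_quote, safe="").replace("-", "%2D")
    return f"{citation.url}#:~:text={encoded}"


def hover_card(citation: Citation, source_text: str) -> Optional[dict]:
    """Data for a hover preview, or None when the quote cannot be verified."""
    if not quote_is_in_source(citation, source_text):
        return None
    return {
        "title": citation.title,
        "quote": citation.pull_quote,
        "link": deep_link(citation),
    }
```

Returning `None` for an unverifiable quote leaves the interface free to decide what to do next, such as hiding the citation or flagging it for review. An exact-match check like this only catches fabricated quotes; it does not tell you whether the summary interprets a real quote correctly.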

Each variation comes with tradeoffs. Inline highlights and direct quotations are useful for verifying specific information, but can prematurely converge on a single source or concept when drawing from a broader reference set. Multi-source references and lightweight links are useful for leading users deeper into a topic or a large set of content, but are less reliable because the source material is more likely to be external and unverified.