Every generation costs money to run, and iterative prompting, chained runs, and multi-step workflows can add up quickly. Most products absorb these costs behind the scenes and roll them into metered usage, governed by credits that refresh regularly or can be purchased in bulk. This shields users from the minutiae of managing individual token counts, but it also turns AI costs into a black box.

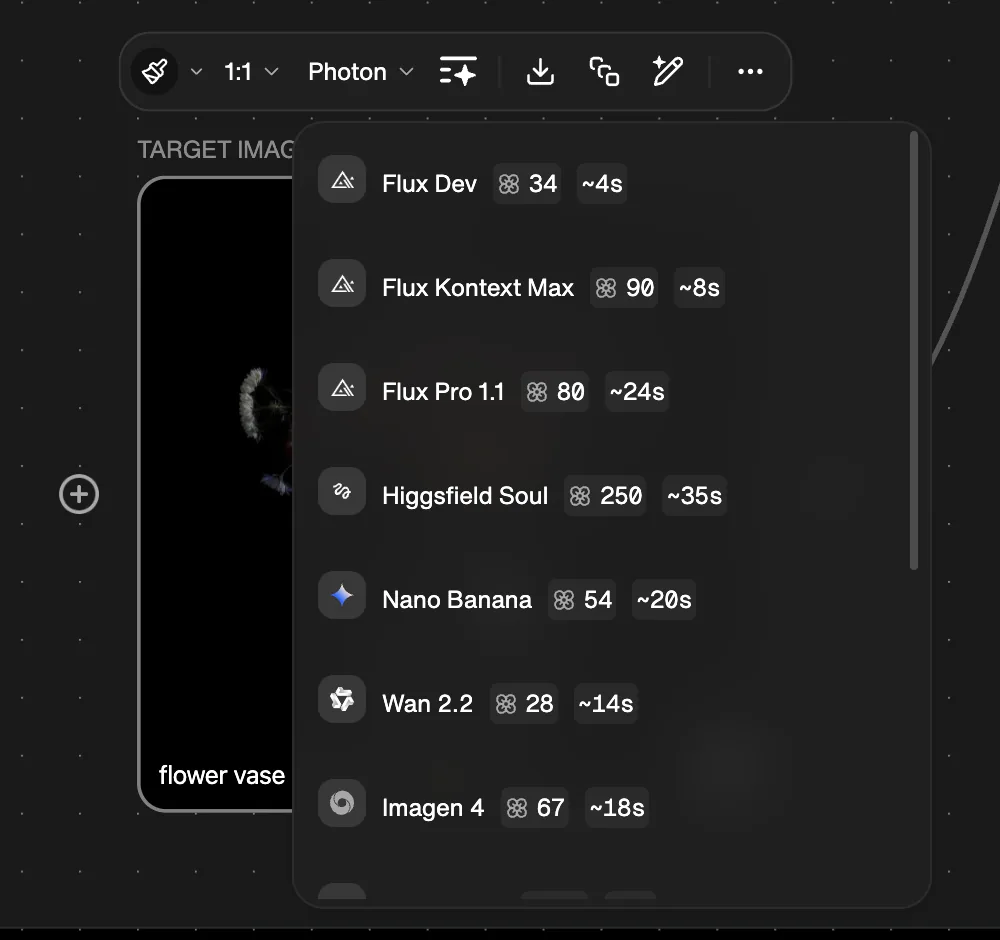

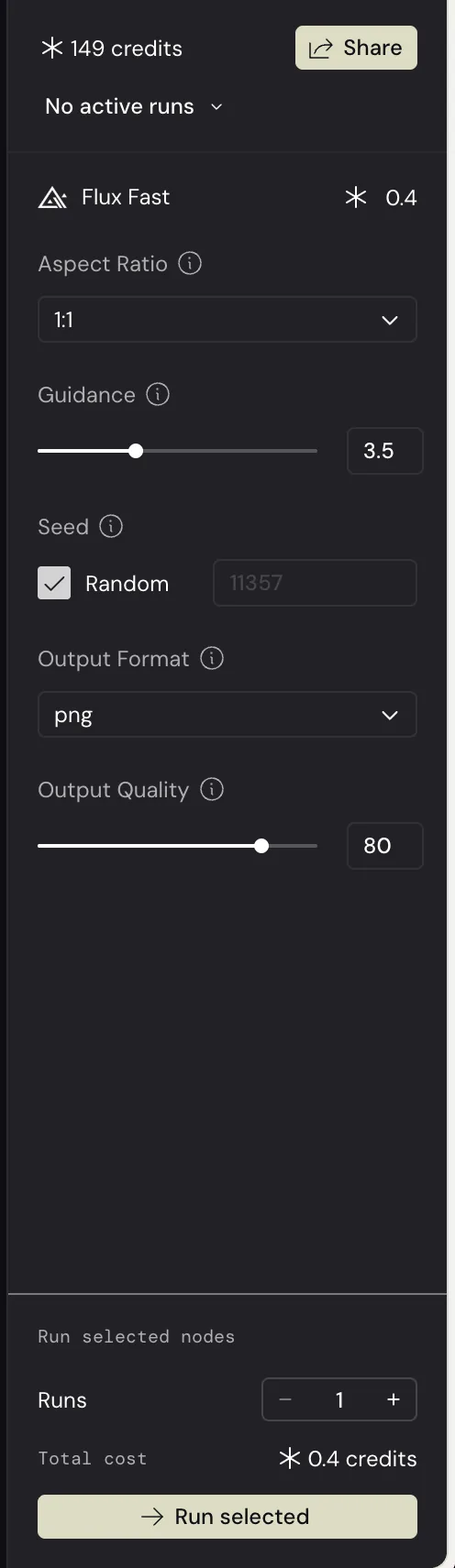

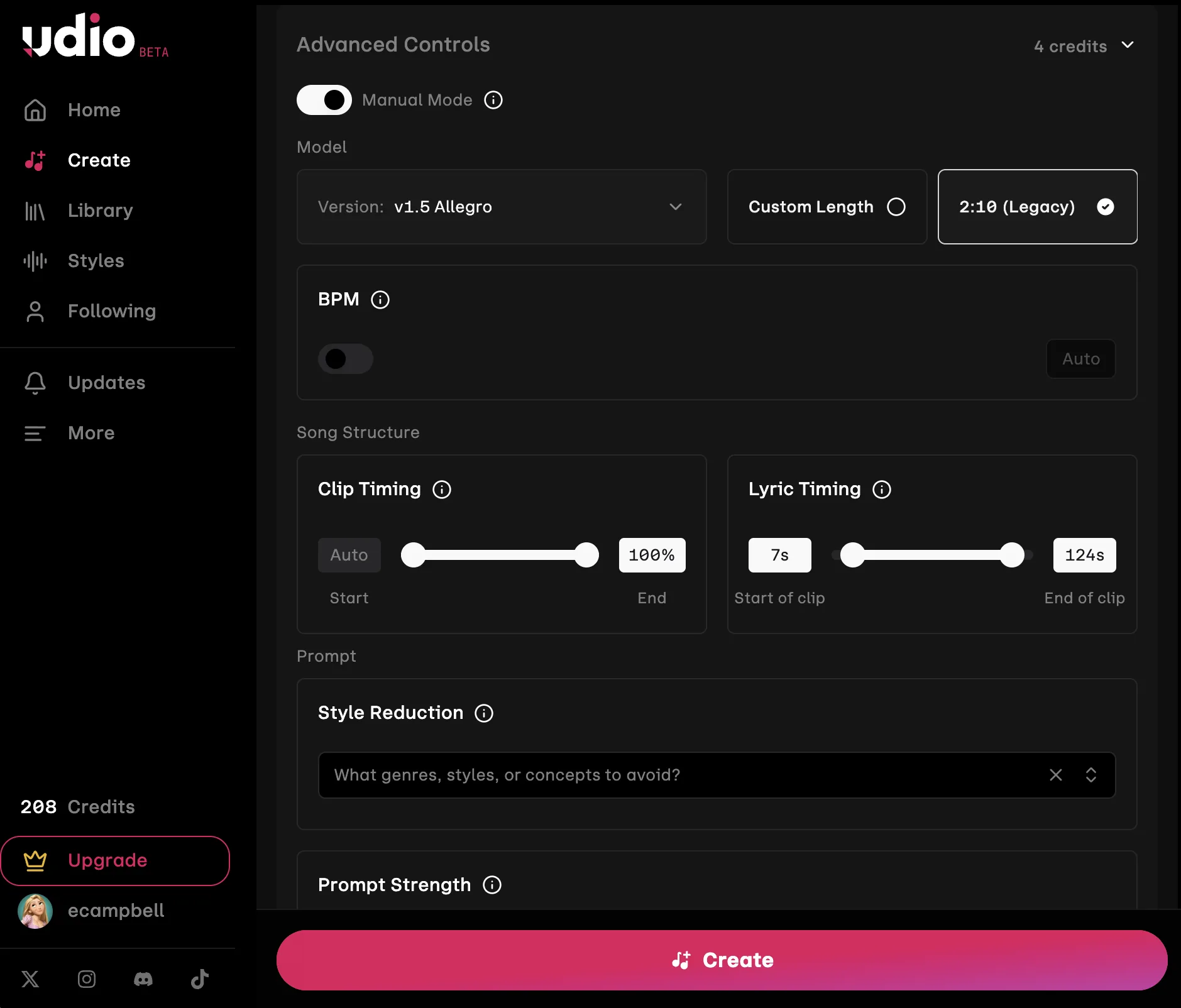

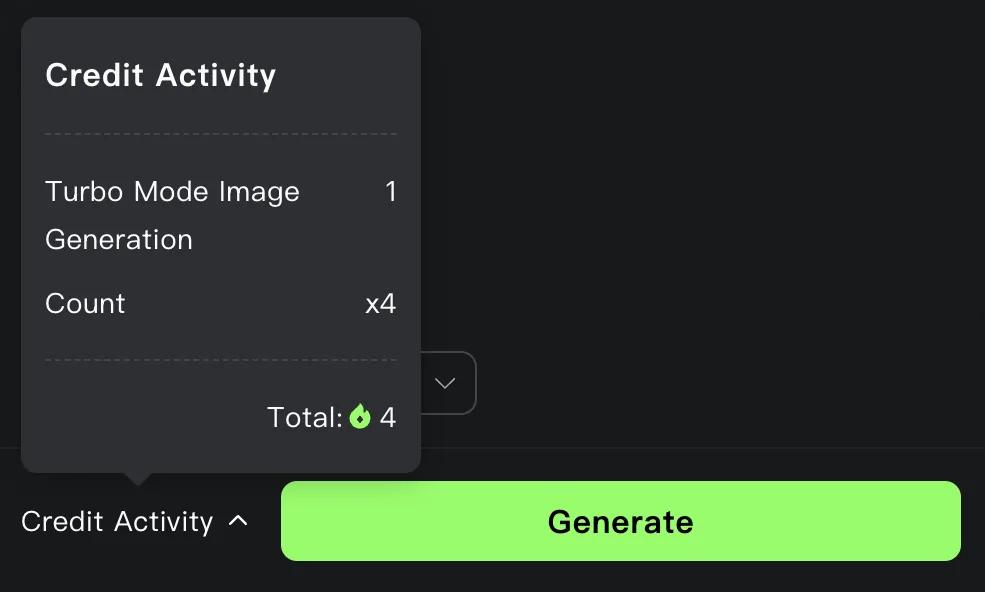

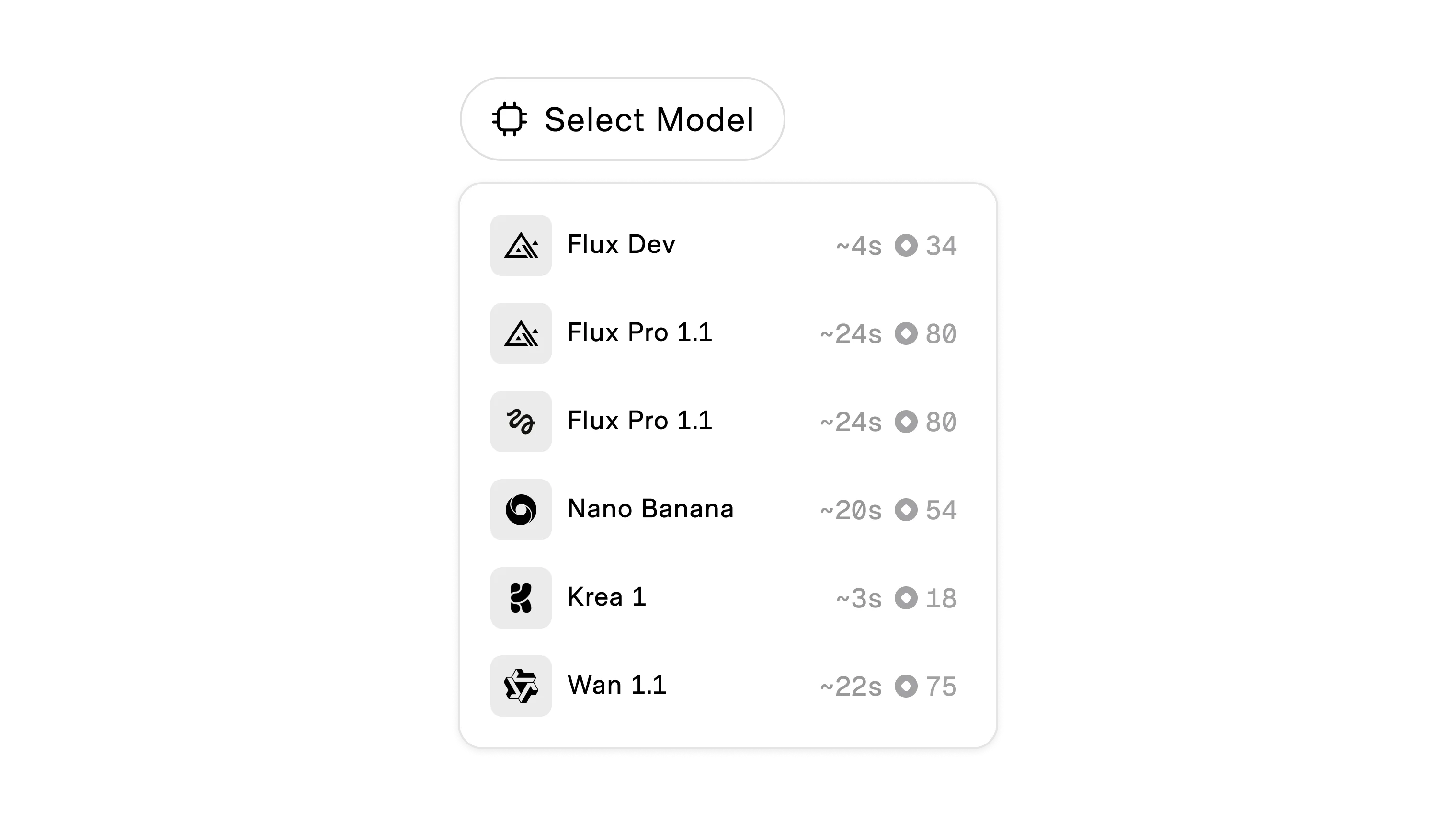

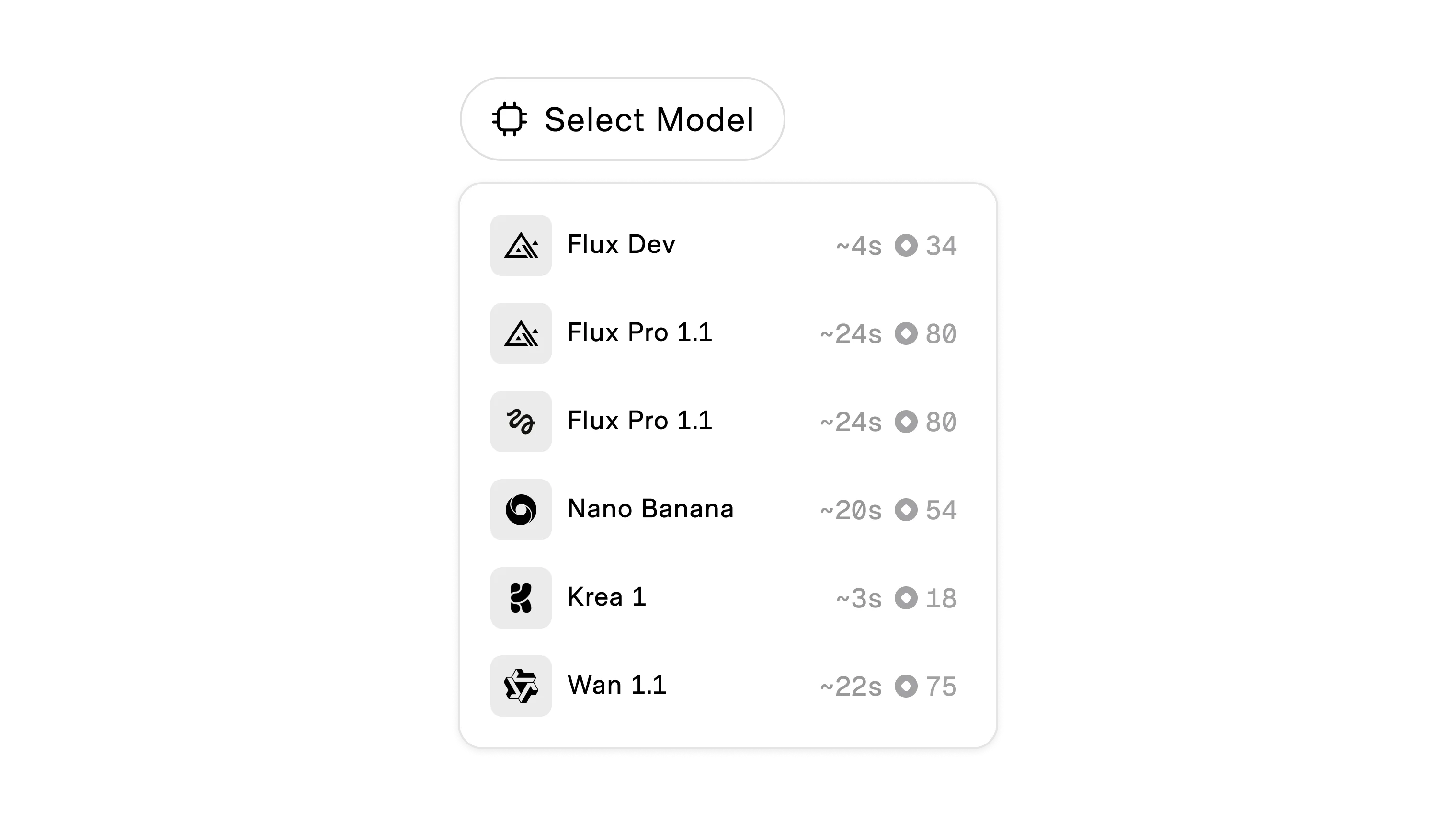

Transparent spend lifts the veil, helping users understand the relative costs of different prompt, parameter, and model combinations before anything runs. Estimated costs are shown beside key actions, and users can see in real time how changing their selection adjusts what they will "pay" in equivalent credits.
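To make the idea concrete, here is a minimal sketch of how a product might compute the estimate shown beside an action. Everything in it is an assumption for illustration: the model names, the rate table, and the `estimate_credits` function are hypothetical and do not reflect any real product's pricing.

```python
# Hypothetical rate table: credits charged per 1,000 tokens.
# These numbers are illustrative assumptions, not real pricing.
RATES = {
    "small": {"input": 1, "output": 2},
    "large": {"input": 10, "output": 30},
}


def estimate_credits(model: str, prompt_tokens: int, max_output_tokens: int) -> int:
    """Return a worst-case credit estimate before anything runs.

    The output side uses the maximum output length the user selected,
    so the estimate is an upper bound rather than the final charge.
    """
    rate = RATES[model]
    milli_credits = (
        prompt_tokens * rate["input"] + max_output_tokens * rate["output"]
    )
    # Round up to whole credits so the user is never shown less than they may pay.
    return -(-milli_credits // 1000)


# Recompute whenever the user changes a selection, then show the result
# next to the action button.
for model in RATES:
    print(model, estimate_credits(model, prompt_tokens=500, max_output_tokens=1000))
```

Because the estimate is recomputed from the current selection, switching models or lowering the output limit can update the displayed cost immediately, which is the feedback loop the pattern depends on.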

In AI playgrounds and development environments, credits may be priced in local currency to normalize the cost to a common denominator. This is helpful for technical users, but non-technical users are likely to be confused when seeing a dollar amount on top of the cost of their subscription.

Non-technical users benefit from a clear product-based credit system. However, the tradeoff of using a product-specific currency is that there is no standard: users working across multiple products have no consistent way to compare the relative cost of different combinations of inputs and parameters. A standard may emerge over time, but for now the inconsistency is a usability risk to be aware of.