Chained actions connect multiple inputs into a single thread of work. They can support convergent workflows, which aim to reproduce similar outputs on demand, or divergent workflows, which support creative exploration and comparison.

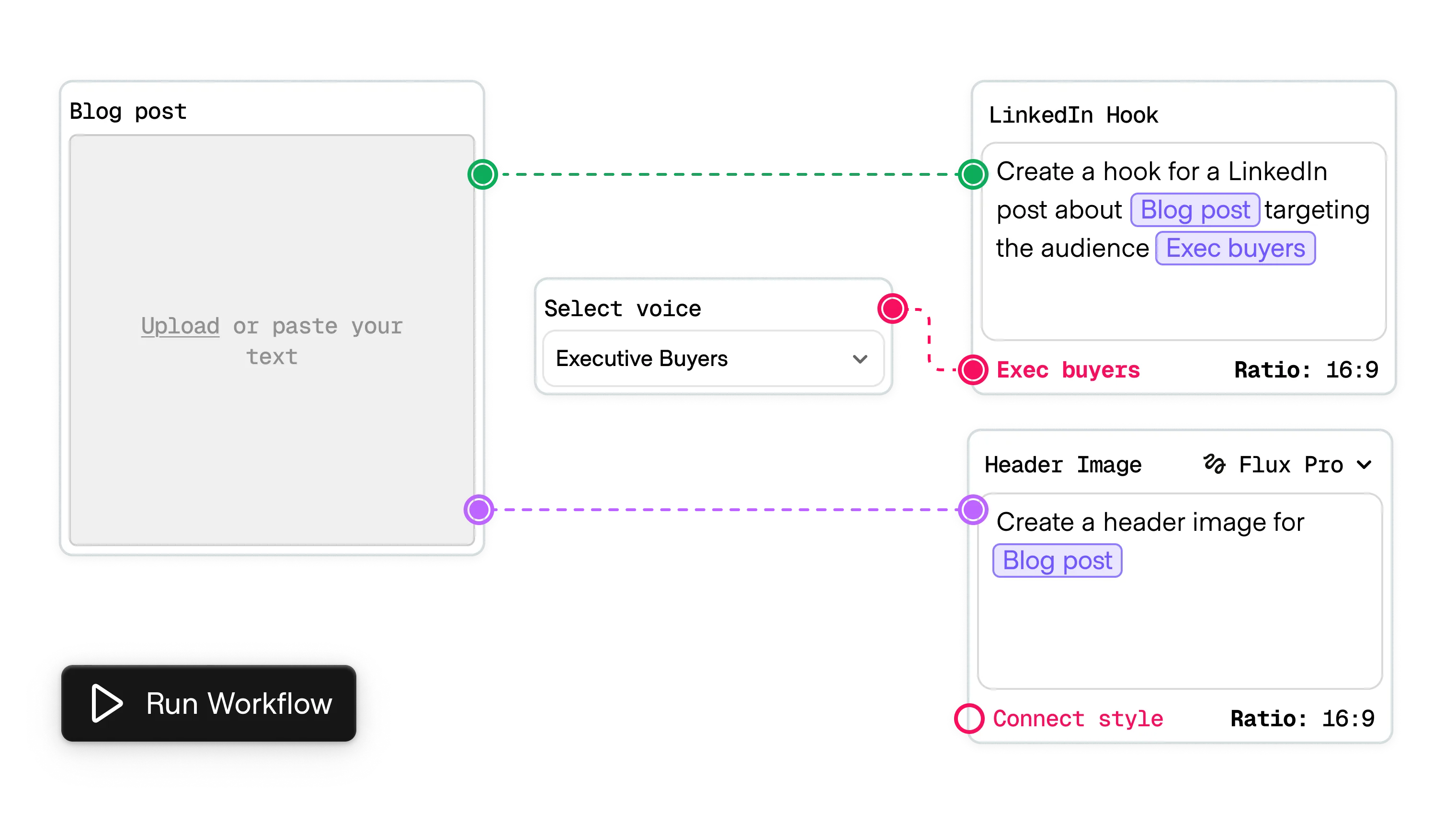

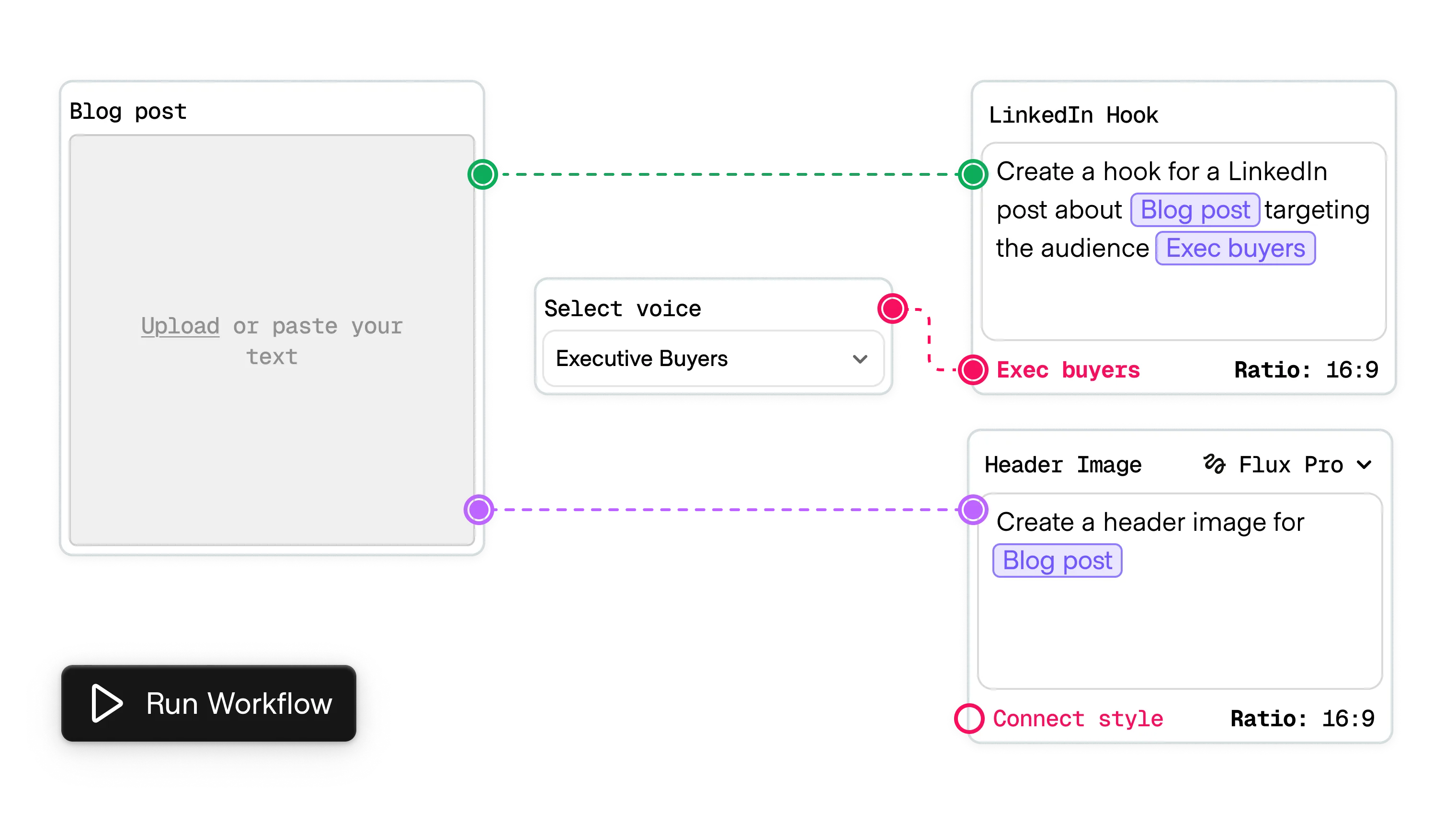

In a multi-step action, prompts, parameters, and tools live as nodes on an infinite board and connect through edges, like a flow diagram. Users can map out a run ahead of time, branch at any step, and see how each decision influences downstream results.
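The board can be pictured as a directed graph: each node holds a prompt and its parameters, edges point from upstream to downstream steps, and running the board evaluates nodes in dependency order. The sketch below is a minimal illustration under stated assumptions, not how any particular tool works. `Node`, `Board`, `branch`, and `fake_model` are hypothetical names, and `fake_model` stands in for a real model call by echoing its inputs so the data flow is visible.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class Node:
    """A step on the board: a prompt plus its parameters (hypothetical schema)."""
    name: str
    prompt: str
    params: dict = field(default_factory=dict)


class Board:
    def __init__(self):
        self.nodes = {}  # node name -> Node
        self.edges = {}  # node name -> set of upstream node names

    def add(self, node, upstream=()):
        self.nodes[node.name] = node
        self.edges[node.name] = set(upstream)

    def branch(self, name, new_name, **param_overrides):
        """Fork a step: copy it with changed parameters, keeping the same upstream inputs."""
        original = self.nodes[name]
        fork = Node(new_name, original.prompt, {**original.params, **param_overrides})
        self.add(fork, self.edges[name])
        return fork

    def run(self, model):
        """Evaluate each node only after its upstream nodes, feeding their outputs in."""
        outputs = {}
        for name in TopologicalSorter(self.edges).static_order():
            node = self.nodes[name]
            inputs = [outputs[u] for u in sorted(self.edges[name])]
            outputs[name] = model(node.prompt, inputs, node.params)
        return outputs


def fake_model(prompt, inputs, params):
    """Hypothetical stand-in for a model call: shows which inputs and temperature it received."""
    return f"{prompt}({', '.join(inputs)})@t={params.get('temperature', 1.0)}"


# Usage: one upstream step, one downstream step, and a branch that differs only in temperature.
board = Board()
board.add(Node("brief", "summarize brief"))
board.add(Node("draft", "write draft", {"temperature": 0.2}), upstream=["brief"])
board.branch("draft", "draft_wild", temperature=0.9)
out = board.run(fake_model)
```

Because the fork shares its upstream inputs with the original step, the two drafts differ only in the parameter that was changed, which is what makes side-by-side comparison meaningful.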

This structure preserves legibility and oversight. A chain that would otherwise vanish into a chat log becomes a visual map that supports side-by-side comparison, reuse, and deliberate forks.

Use this when the task benefits from structured work with multiple inputs or outputs.

The most common application of chained actions is in traditional workflows that integrate AI steps. In this context, chained actions can be configured to pass information from data sources or user inputs into AI-powered steps as variables.
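A minimal sketch of how such a step might merge variables into a prompt, assuming a simple template syntax. It uses Python's standard `string.Template`; `build_prompt`, the sample record, and the form fields are hypothetical, and real workflow tools each use their own variable syntax. Letting user inputs override data-source fields on a name collision is also an assumption.

```python
from string import Template


def build_prompt(template: str, record: dict, user_inputs: dict) -> str:
    """Merge data-source fields with user inputs, then fill the step's prompt template.
    Assumption: user inputs override data-source fields that share a name."""
    variables = {**record, **user_inputs}
    # substitute() raises KeyError if the template uses a variable nobody supplied,
    # which surfaces a broken connection before the AI step runs.
    return Template(template).substitute(variables)


crm_row = {"company": "Acme", "plan": "Pro"}  # hypothetical row from a data source
form = {"tone": "friendly"}                   # hypothetical user input
prompt = build_prompt("Write a $tone renewal email for $company on the $plan plan.", crm_row, form)
```

The filled `prompt` would then be handed to the AI-powered step; running the same template over each row of a data source is what makes the output reproducible on demand.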

Workflows can be built entirely from AI-enabled steps, but they don't need to live in AI-native tools. Examples include Zapier, Gumloop, and other popular workflow tools.

Some AI-native workflow tools make generative iteration more manageable by letting multiple variations of chained prompts run simultaneously on the canvas.

Users can branch to create variants, remix inputs with slight differences to compare similar prompts for quality, and move between modalities without losing sight of the underlying prompt details. Examples include FloraFauna and Weavy.

Visual workflows help manage the flow of information across complicated agentive jobs, serving as both a plan of action and an observation dashboard while the agents are live. By visually tracking work in progress, users can follow the agent's logic and actions and intervene where necessary.

Agentic workflows let users restrict information and access to specific subflows, and choose the model and tune parameters such as temperature for each task, giving users fine-grained control.
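One way to picture per-subflow control is a small configuration object that records each subflow's model, temperature, and permitted data sources, plus a gate that checks access before any data reaches the agent. This is a hypothetical sketch: `SubflowConfig`, `fetch`, the subflow names, and the model identifiers `model-a` and `model-b` are placeholders, not settings from any real tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SubflowConfig:
    """Hypothetical per-subflow settings: which model runs it and what it may read."""
    model: str
    temperature: float
    allowed_sources: frozenset


CONFIGS = {
    # Exploratory research tolerates more variation; billing should be deterministic.
    "research": SubflowConfig("model-a", 0.7, frozenset({"web", "docs"})),
    "billing": SubflowConfig("model-b", 0.0, frozenset({"invoices"})),
}


def fetch(subflow: str, source: str) -> str:
    """Gate data access: a subflow may only read sources listed in its own config."""
    cfg = CONFIGS[subflow]
    if source not in cfg.allowed_sources:
        raise PermissionError(f"{subflow!r} may not read {source!r}")
    return f"data from {source}"  # placeholder for a real data-source read
```

Keeping the gate outside the agent means a misbehaving step in one subflow cannot reach data assigned to another.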