AI increasingly operates independently through workflows, operator mode, and agents. With that autonomy come moments where a wrong decision can have real consequences, from lost data to unexpected costs to leaked personal information.

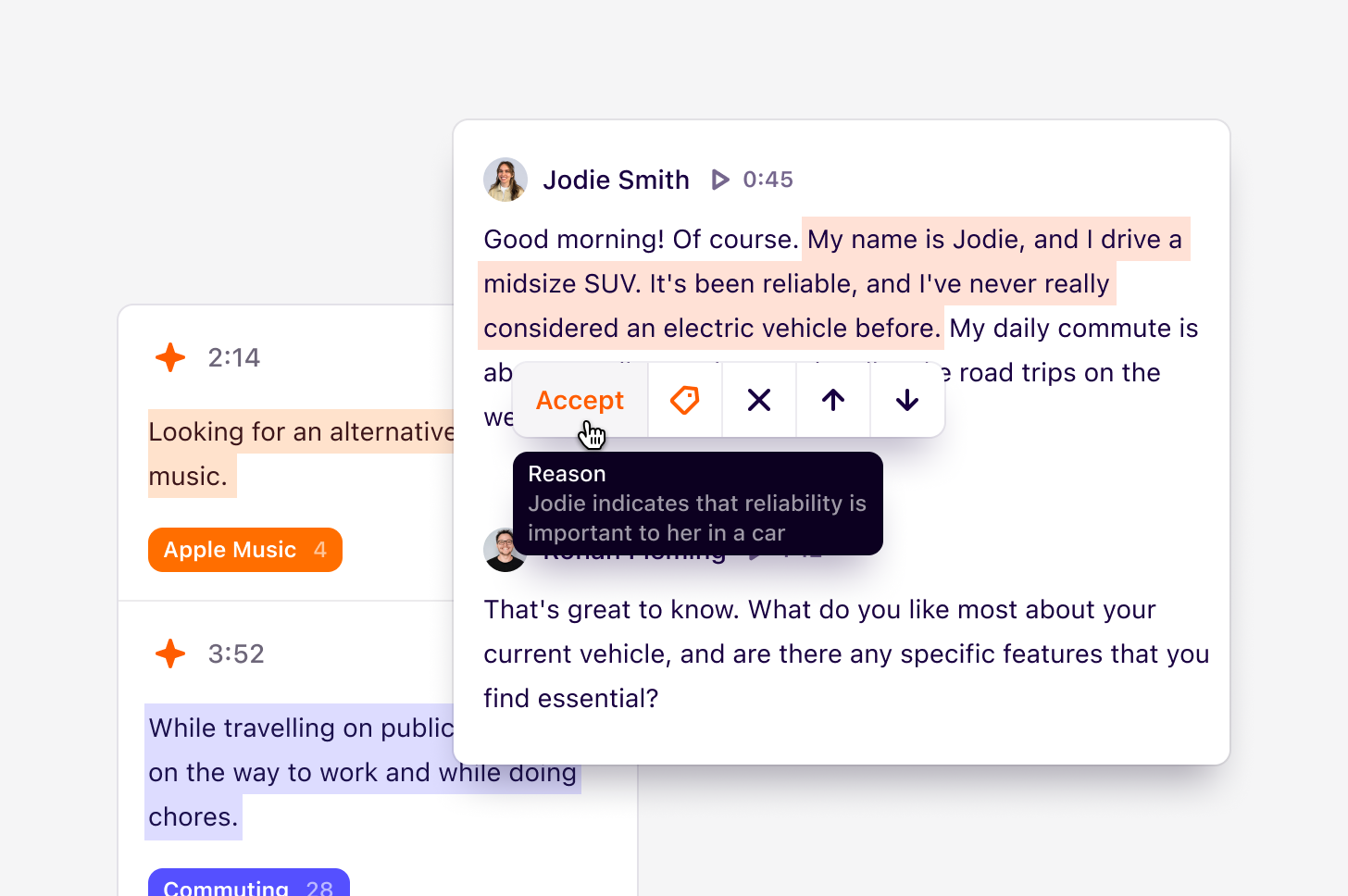

Whenever the AI takes action on the user's behalf, it may be appropriate to have it first get verification from the human orchestrating it.

Verification isn't required for simple, low-risk tasks such as running a search, subscribing to a newsletter, or drafting an email. In these cases the impact of a poor choice is negligible, while the cost of added friction and wasted time is high. Repeated actions after an initial verification may also fit this category, such as an auto-fill action that runs after a sample response has been verified.

This equation changes when an errant action has real impact:

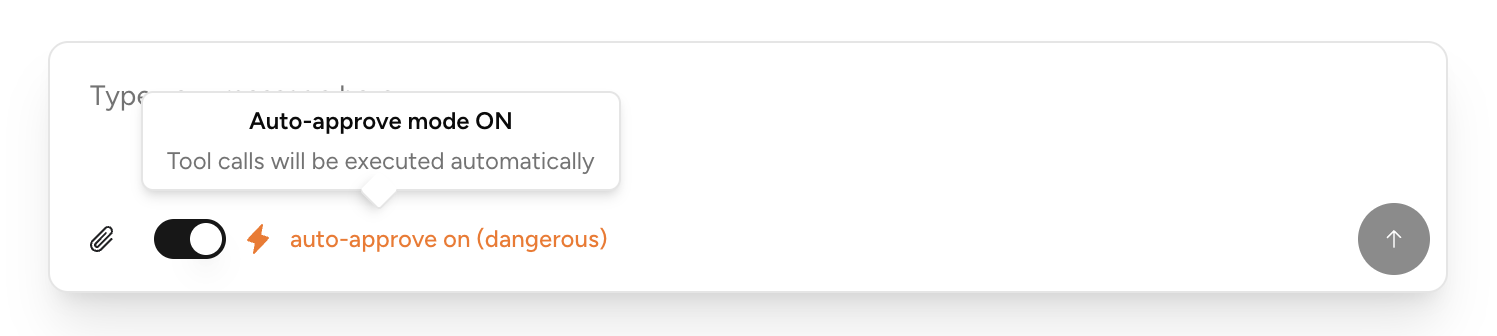

In these circumstances, verifications should be required by default, though users may choose to skip them through settings or instructions.
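
As a minimal sketch, assuming a simple two-tier risk label on each action, the policy above (skip verification for low-risk actions, require it by default for high-risk ones, and let users opt out) could look like this. The `Risk`, `Action`, `UserSettings`, and `needs_verification` names and the example action names are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    LOW = "low"    # e.g. running a search or drafting an email
    HIGH = "high"  # e.g. overwriting data, spending money, sharing personal info


@dataclass
class Action:
    name: str  # hypothetical action identifier
    risk: Risk


@dataclass
class UserSettings:
    # Hypothetical setting: high-risk actions the user has chosen to run without verification.
    skip_verification: frozenset = frozenset()


def needs_verification(action: Action, settings: UserSettings) -> bool:
    """Return True if the agent should pause and ask the user before acting."""
    if action.risk is Risk.LOW:
        # The friction of asking outweighs the negligible cost of a mistake.
        return False
    # High-risk actions require verification by default unless the user opted out.
    return action.name not in settings.skip_verification


settings = UserSettings(skip_verification=frozenset({"overwrite_file"}))
print(needs_verification(Action("web_search", Risk.LOW), settings))       # False
print(needs_verification(Action("delete_records", Risk.HIGH), settings))  # True
print(needs_verification(Action("overwrite_file", Risk.HIGH), settings))  # False
```

Keeping the opt-out as an explicit allowlist means a new high-risk action is verified until the user decides otherwise, which matches the "required by default" stance.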

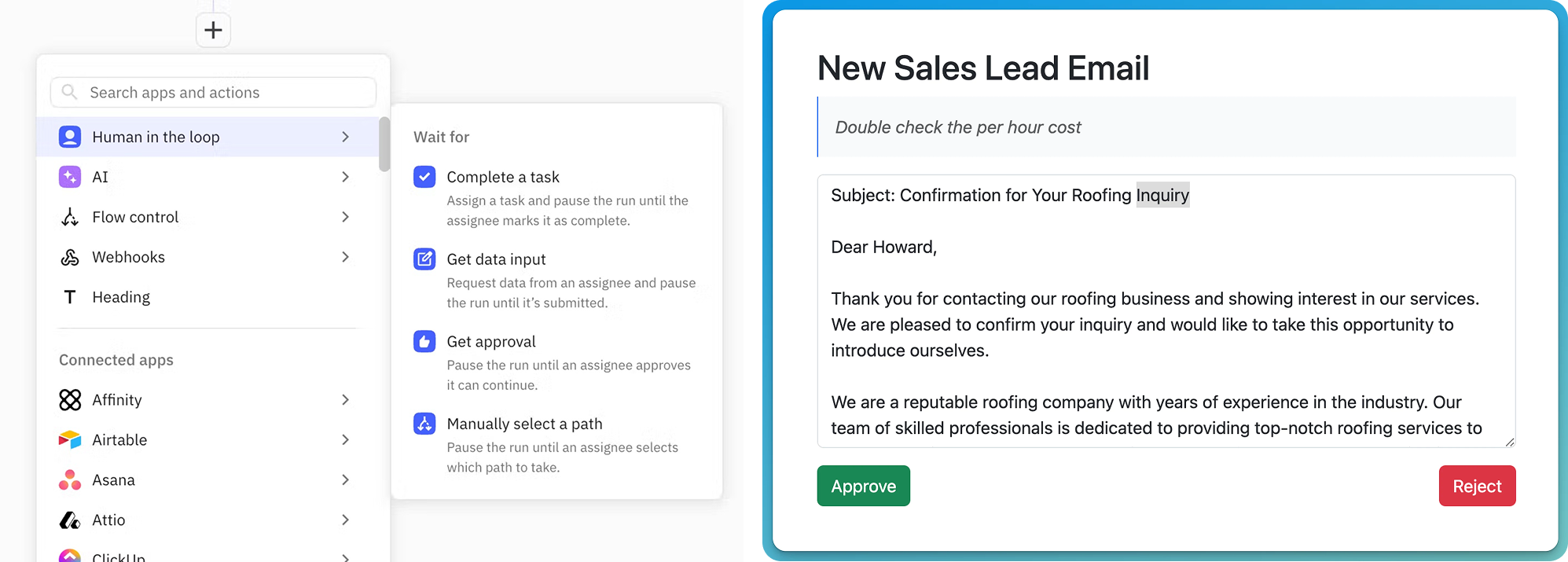

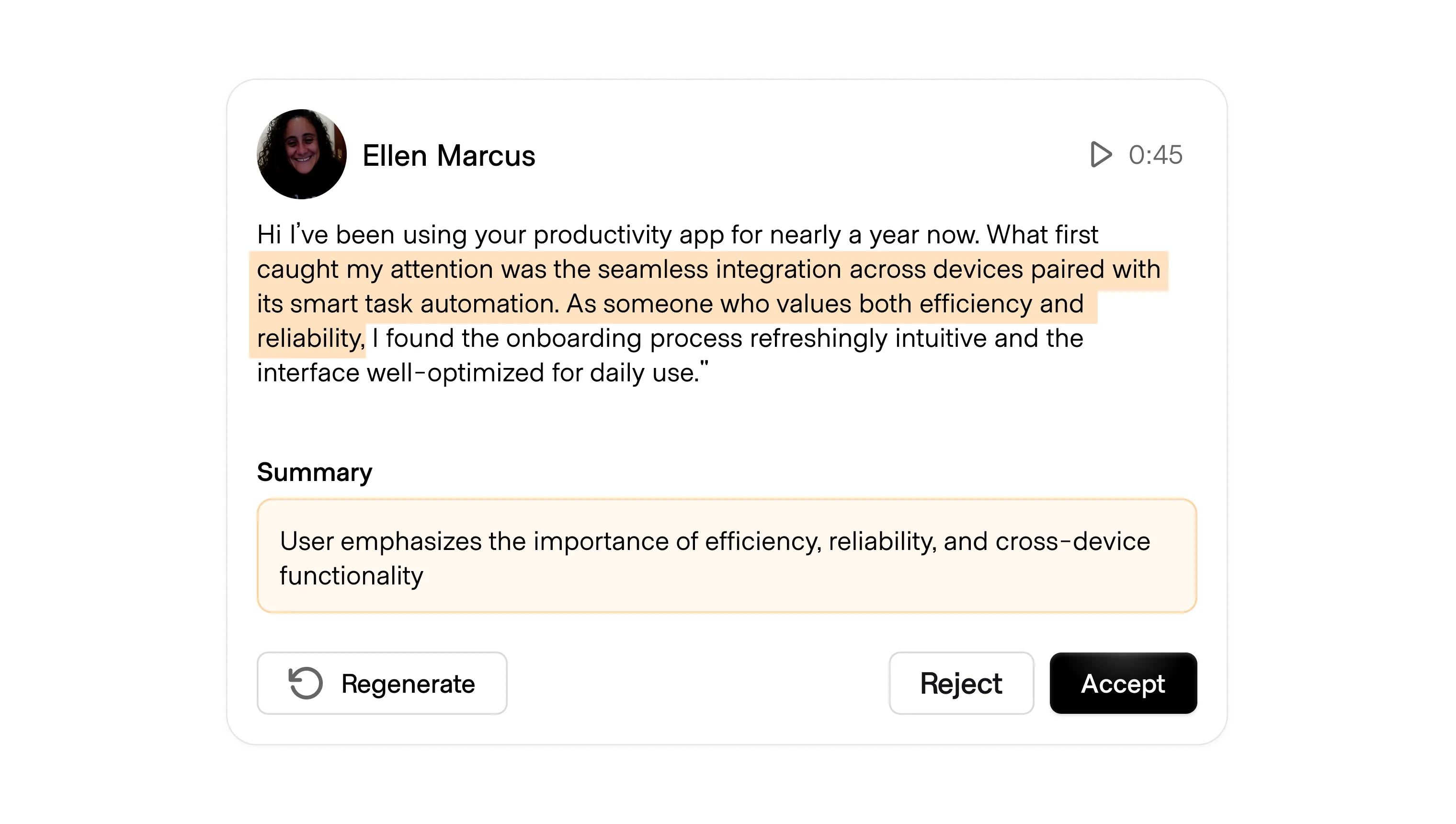

A basic "go/no-go" decision pattern is already common in workflows and other repetitive actions, where an erroneous prompt could inadvertently overwrite data or run up unexpectedly high compute costs. Examples include verifying an AI's action plan or a sample response before it proceeds with the full action. These should be lightweight interactions, and they can happen at the source or be pushed to another tool. For example, many AI-powered workflow tools use connectors to send a verification request to Slack, email, SMS, or other common workspaces.
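
The sample-response check described above can be sketched as a single go/no-go gate: process one item, show the result to a human, and only then run the rest of the batch without further prompts. This is a minimal illustration, not any particular workflow tool's implementation; `run_with_sample_check` and its `transform` and `approve` callbacks are hypothetical names.

```python
from typing import Callable


def run_with_sample_check(
    items: list[str],
    transform: Callable[[str], str],
    approve: Callable[[str, str], bool],
) -> list[str]:
    """Run `transform` on one sample item, ask for approval, then process the rest."""
    if not items:
        return []
    sample_in = items[0]
    sample_out = transform(sample_in)
    # Go/no-go: one lightweight check before committing to the full, possibly costly, run.
    if not approve(sample_in, sample_out):
        raise RuntimeError("Run cancelled: sample response was rejected")
    # After the single approval, the repeated actions proceed without further prompts.
    return [sample_out] + [transform(item) for item in items[1:]]
```

In practice, `approve` could be a console prompt built on `input()`, or, under the connector approach mentioned above, a function that posts the sample to a chat or messaging channel and waits for a reply. Passing it in as a callback keeps the gating logic independent of where the human actually answers.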

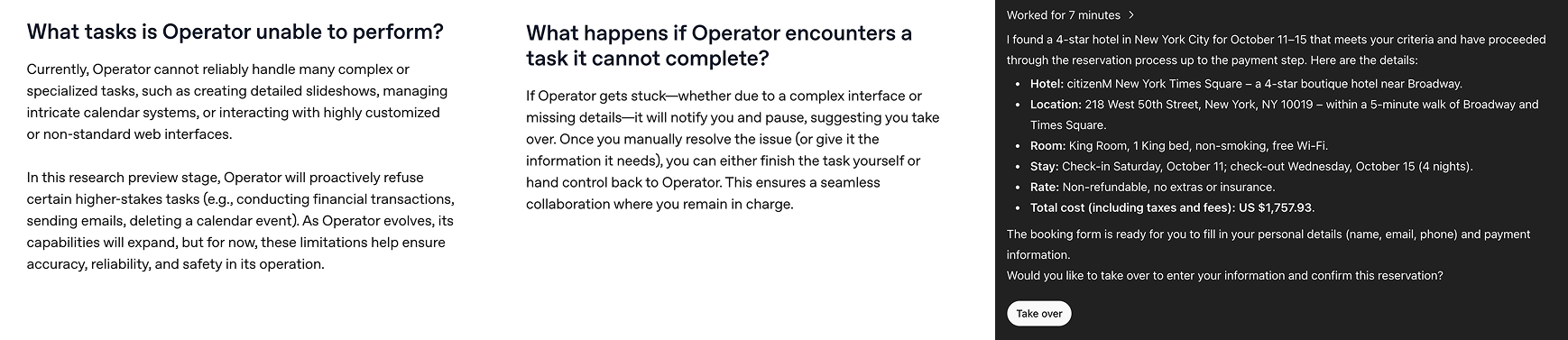

OpenAI demonstrates how it struck this balance in its operator mode, which browses the web and takes actions autonomously while the user watches a shared view of the browser and monitors the AI's stream of thought. The AI is explicitly instructed to halt when payment data or other sensitive information might be shared. The company hints that it may relax this constraint later and let users override it.

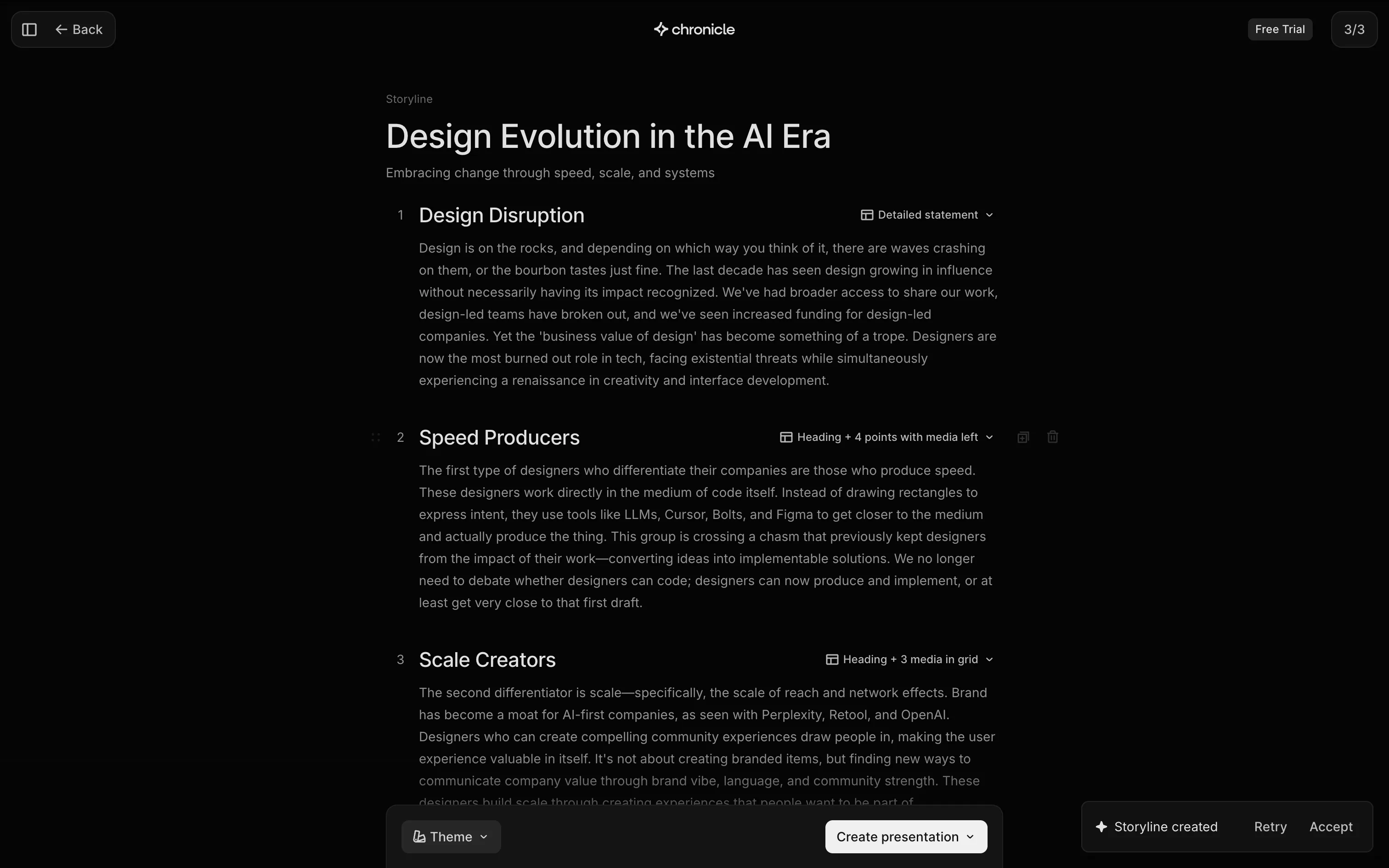

Over time, we will likely see user-led rules in settings panels or agent.md files that provide instructions about when to stop during unforeseen loops, similar to how workflow builders like Zapier and Relay allow these steps to be entered manually. This becomes even more complicated as agents work with each other, where a subagent may require approval from a more established or senior agent. Look to parallels in how teams of humans operate interdependently to explore what this could look like in your domain.