As AI becomes increasingly autonomous, humans need affordances that let them monitor it without disrupting its flow and intervene when necessary. Shared vision provides this: it lets them observe the AI passively and step in to orchestrate it only when needed.

In the physical world, assistants like Alexa and autonomous vehicles have already established the pattern of the AI signaling when it is active or when it requires human intervention, generally through colored lights or sound.

In the digital space, small affordances that keep the user informed of what the system is doing have a long history as well, from spinners to more recent “AI is thinking…” indicators. When the AI is working in sensitive spaces or in a shared context, taking action while the user watches, users need clear visibility into what it's doing so they can intervene if necessary.

Ambient shared vision cues alert users to what the AI is seeing and doing. They are meant to attract attention, show what the AI is seeing, and make it clear that the user can intervene.

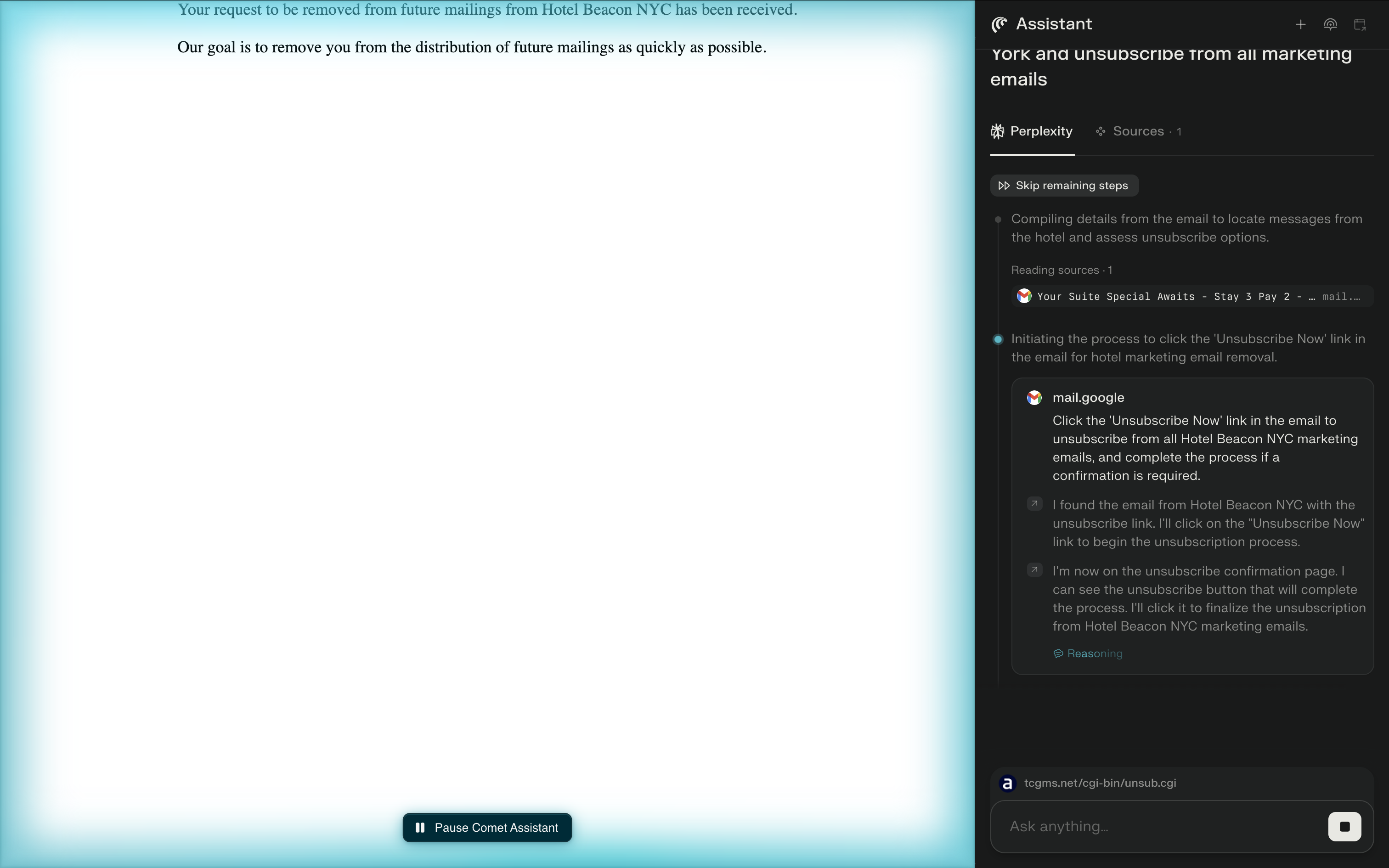

Perplexity’s Comet browser offers a recent example. When the AI is working in a tab, the page where it’s active has a subtle inset glow. So far that’s the extent of the affordance, but one can imagine other cues (particularly color) being used to tell the user when they need to intervene, much like the colored light bar on GM’s Super Cruise steering wheel.

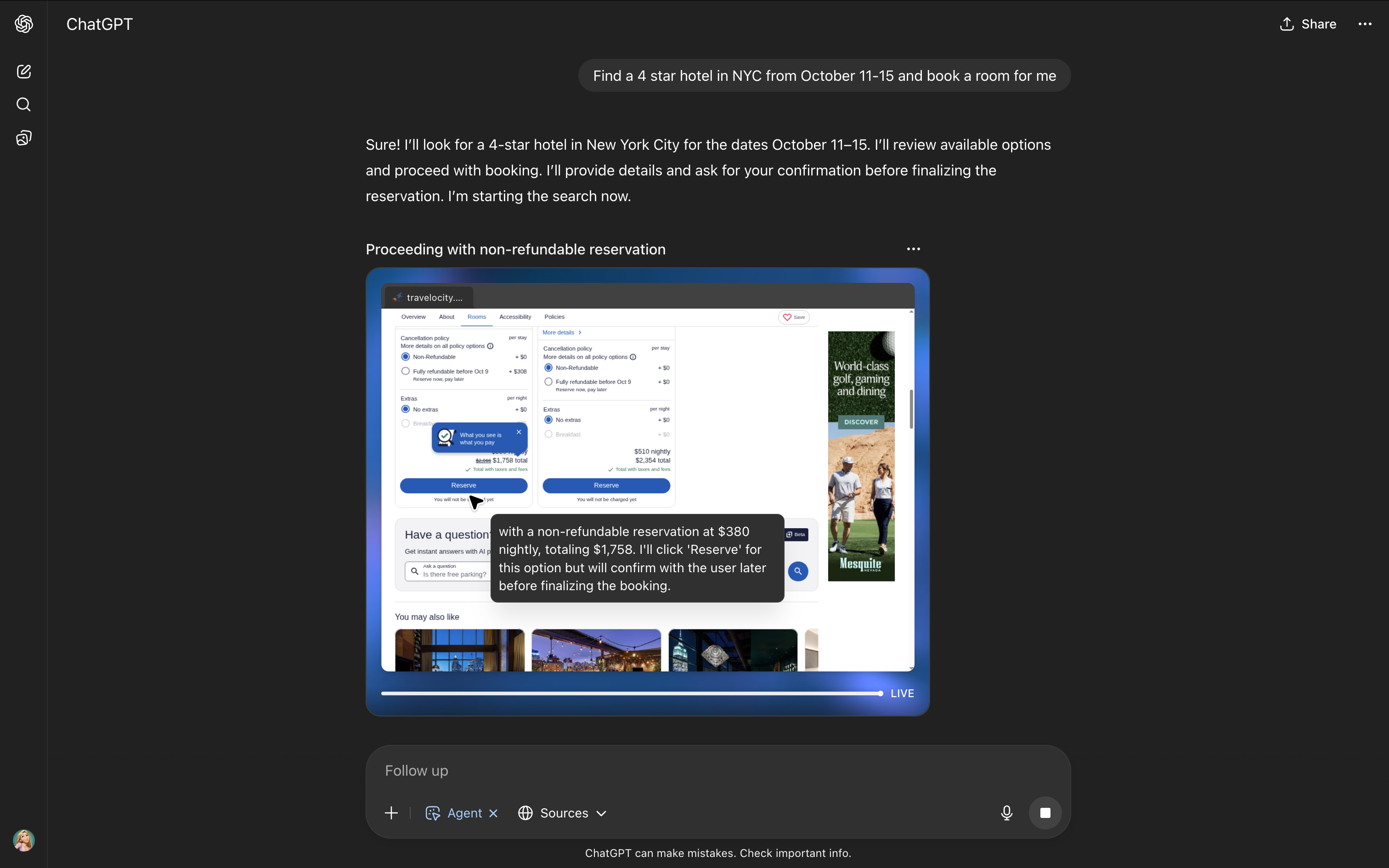

OpenAI's Operator takes a related approach, showing the user the browser within the conversation itself. The AI's activity updates in real time, and a ••• menu in the top right includes controls that let the user take over.