The AI's Stream of Thought is the visible trace of how it navigated from input to answer. This might include the plan it formed, the tools it called, the code it executed on your behalf, and the checks and decisions it made along the way. When this information is visible, the system becomes more legible and easier to trust.

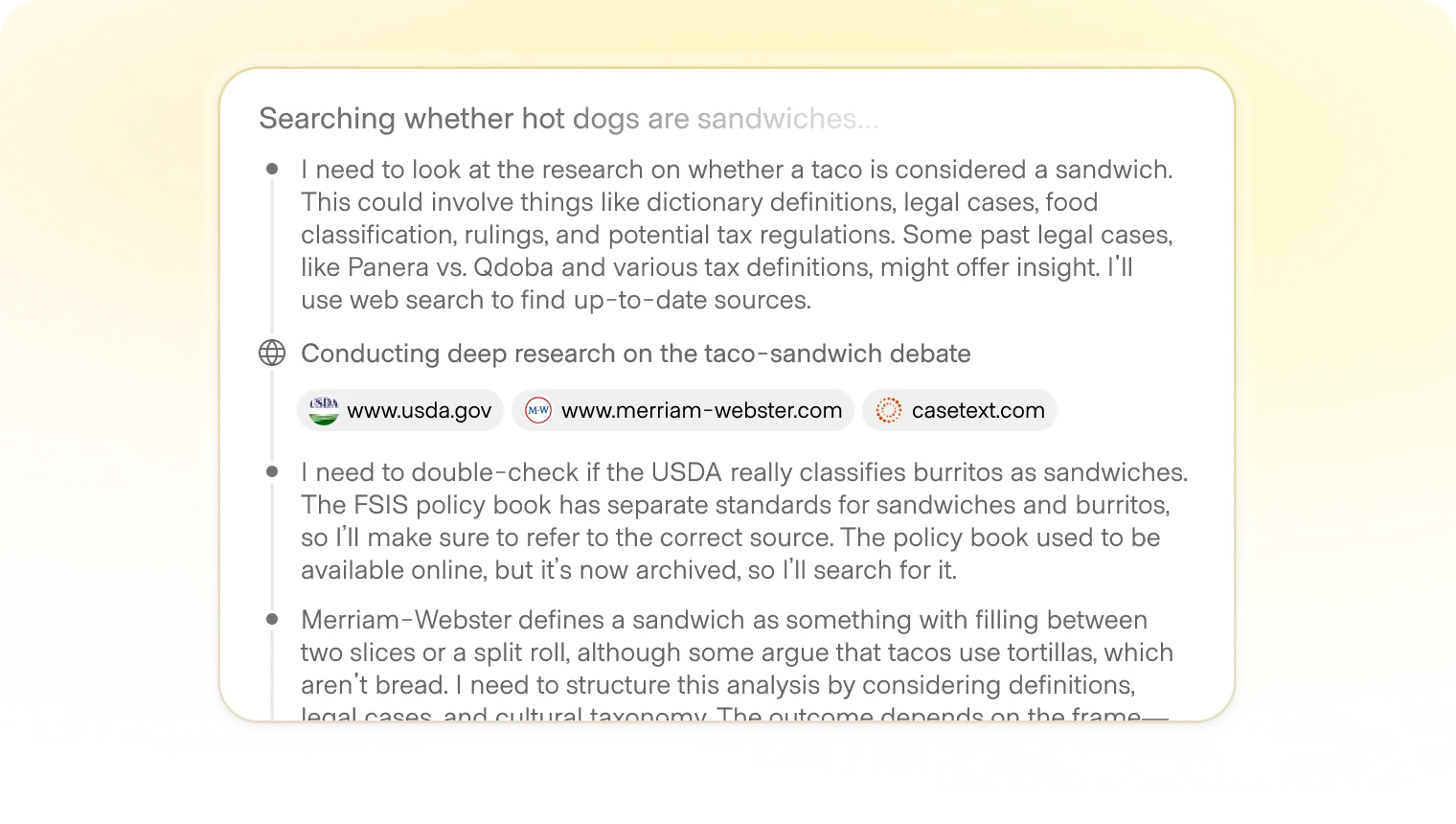

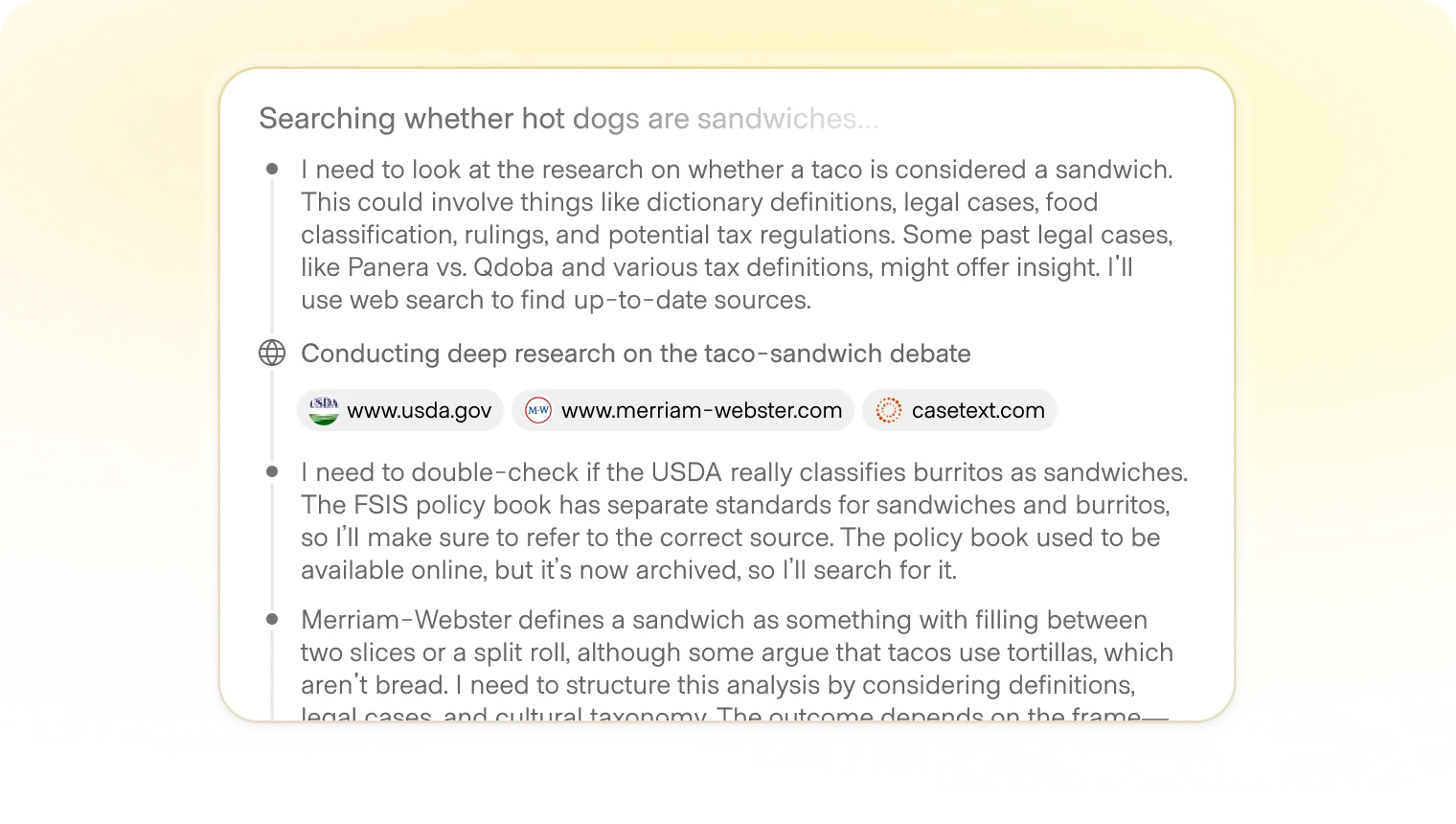

The form of this pattern is simple and nearly universal: a bounded box, with details minimized or hidden altogether behind a click, showing the AI's logic in real time or for review once the task is complete.

Depending on the depth and context of the task, what is included may vary. Across products, you’ll see three broad expressions:

In addition to defining this content, designers must also consider how much data to show about each tool or reference called, how to signal changes in the AI's logical state, and how much detail to include in each summary.

When setting these standards for your product, consider differences in mode and intensity. Short chats rarely require deep logic, while extended thinking or coding tasks (more expensive in both cost and time) may need to show more detail so users can monitor the work and intervene if necessary.
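
One way to make these standards concrete is to write them down as a per-mode display policy. The sketch below is a minimal illustration, not a prescribed implementation: the mode names, the `TraceDisplay` fields, and the default values are all hypothetical and would need tuning for a real product.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    """Hypothetical interaction modes; a real product may define others."""
    QUICK_CHAT = "quick_chat"
    EXTENDED_THINKING = "extended_thinking"
    CODING_TASK = "coding_task"


@dataclass(frozen=True)
class TraceDisplay:
    """How much of the AI's trace to surface (field names are assumptions)."""
    collapsed_by_default: bool  # hide details behind a click
    show_tool_details: bool     # show data about each tool or reference called
    summary_sentences: int      # rough length of each step summary


# Illustrative defaults only: short chats stay minimal, while longer and more
# expensive tasks expose more so users can monitor and intervene.
DISPLAY_BY_MODE = {
    Mode.QUICK_CHAT: TraceDisplay(
        collapsed_by_default=True, show_tool_details=False, summary_sentences=1
    ),
    Mode.EXTENDED_THINKING: TraceDisplay(
        collapsed_by_default=True, show_tool_details=True, summary_sentences=3
    ),
    Mode.CODING_TASK: TraceDisplay(
        collapsed_by_default=False, show_tool_details=True, summary_sentences=3
    ),
}


def display_for(mode: Mode) -> TraceDisplay:
    """Look up the display policy for a given mode."""
    return DISPLAY_BY_MODE[mode]
```

Keeping the policy in one table makes the trade-off between modes easy to review and adjust as a team, rather than leaving it scattered across individual components.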