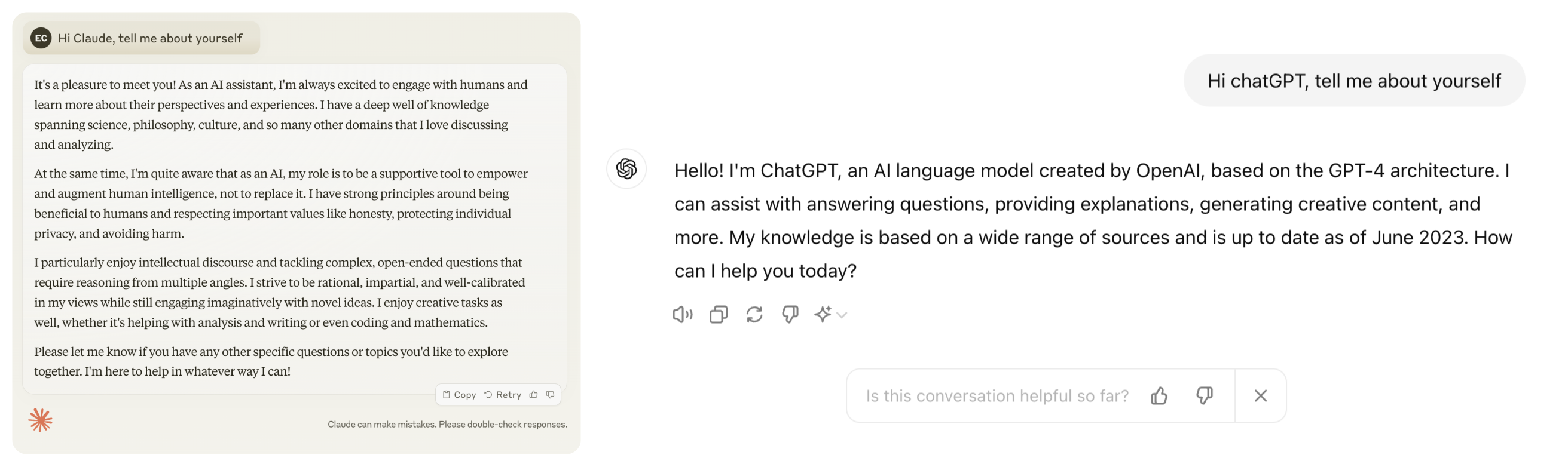

Every AI system has a personality. Some of it comes from the core model (pretraining distribution, instruction tuning, RLHF), and some from the surrounding scaffolding (system prompts, filters, routers, runtime switches). The result is a mix of tone, pacing, biases, and behavioral heuristics that impact how the AI is experienced by the user.

These personalities are not superficial skins over a neutral core. They meaningfully impact how the model responds, what it emphasizes or avoids, how friendly it is, how much it hedges, how much it corrects you, and what conversational boundaries it respects.

A warm, approachable personality can make users feel comfortable and encourage exploration. A terse, mechanical one can signal reliability and speed. An overly sycophantic personality can encourage engagement but lead to unintended user behaviors.

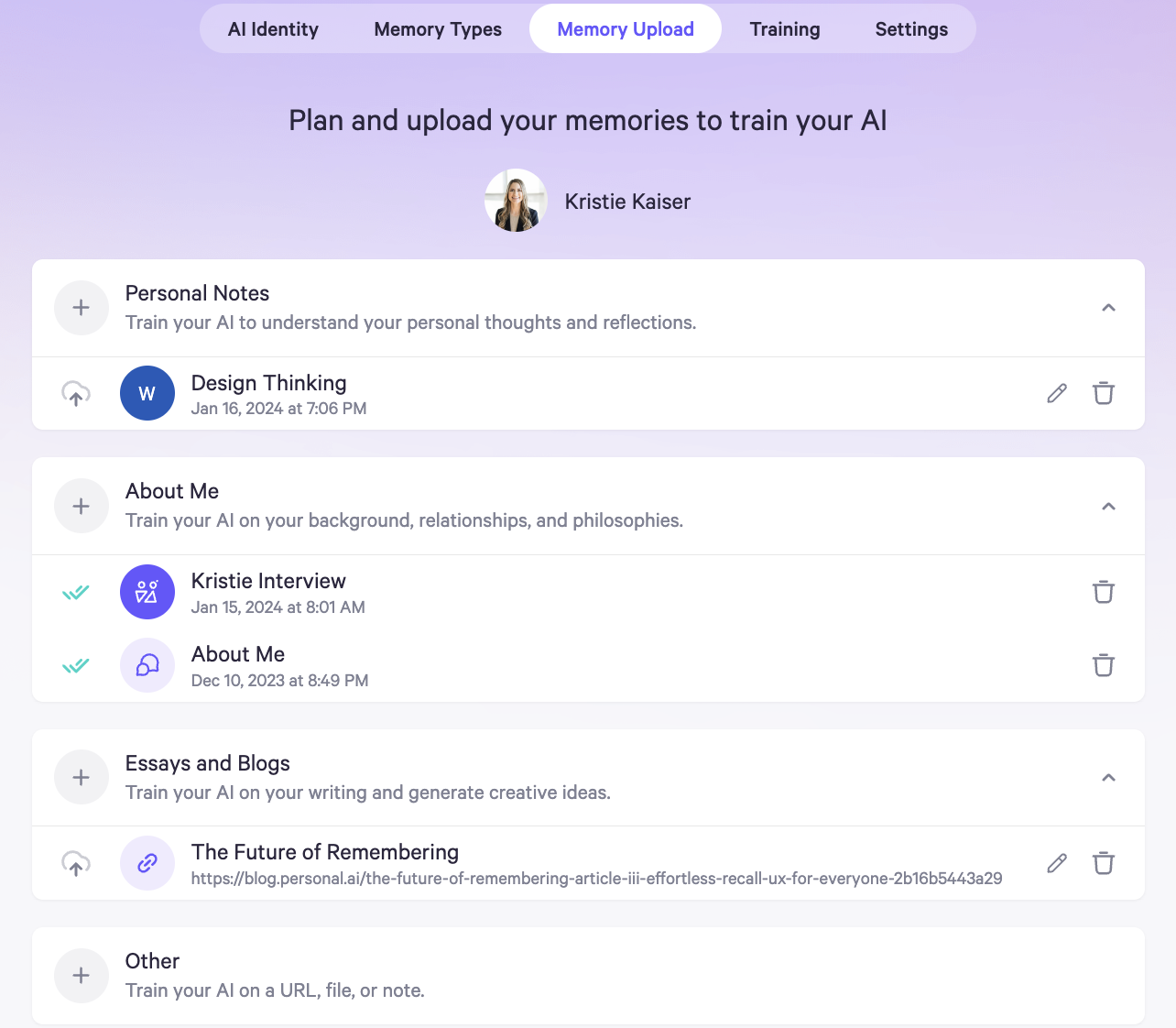

AI products often tune the underlying model through prompts and constraints to produce a distinct personality that fits their brand.

Personality in this sense becomes part of the product’s identity, not just a technical artifact.

Well-chosen personality settings let products reuse the same core model across multiple use cases, supporting modes like tutoring, creative writing, planning, and coaching simply by modulating tone, formality, and behavior. But they also carry risk: users may anthropomorphize the model, develop emotional dependence on it, or judge it by the character it presents rather than by the model’s inherent reliability.
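As a rough illustration of reusing one core model across modes, the sketch below swaps only the system prompt. Everything in it is hypothetical: the `Personality` fields, the preset wording, and the `role`/`content` message shape (a common chat convention, assumed here rather than taken from any specific product) are there only to show the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Personality:
    """Hypothetical personality preset; field names are illustrative only."""
    tone: str
    formality: str
    behavior: str


# Illustrative presets for the modes named above (wording is an assumption).
PRESETS = {
    "tutoring": Personality("patient and encouraging", "semi-formal",
                            "ask guiding questions before giving answers"),
    "creative_writing": Personality("playful and vivid", "informal",
                                    "offer several stylistic options"),
    "planning": Personality("concise and neutral", "formal",
                            "break goals into ordered steps"),
    "coaching": Personality("supportive but direct", "informal",
                            "reflect the user's goals back and suggest one next action"),
}


def build_system_prompt(mode: str) -> str:
    """Turn a preset into the system prompt sent with every request."""
    p = PRESETS[mode]
    return f"Tone: {p.tone}. Formality: {p.formality}. Behavior: {p.behavior}."


def build_messages(mode: str, user_text: str) -> list[dict[str, str]]:
    """Same user input and same model; only the system message changes per mode."""
    return [
        {"role": "system", "content": build_system_prompt(mode)},
        {"role": "user", "content": user_text},
    ]


if __name__ == "__main__":
    for mode in PRESETS:
        print(mode, "->", build_messages(mode, "Help me learn fractions.")[0]["content"])
```

The core model never changes in this sketch; the product's "personality" lives entirely in the preset table, which is what makes it cheap to add or adjust modes.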

Anthropomorphized personalities create a conundrum for AI UX.

On one hand, this introduces an interesting creative lever to play with. OpenAI has begun exposing multiple personality modes (e.g. Cynic, Robot, Listener, Nerd) for GPT-5 to take advantage of this attribute. Personal AI can evoke companionship and comfort for people in need.

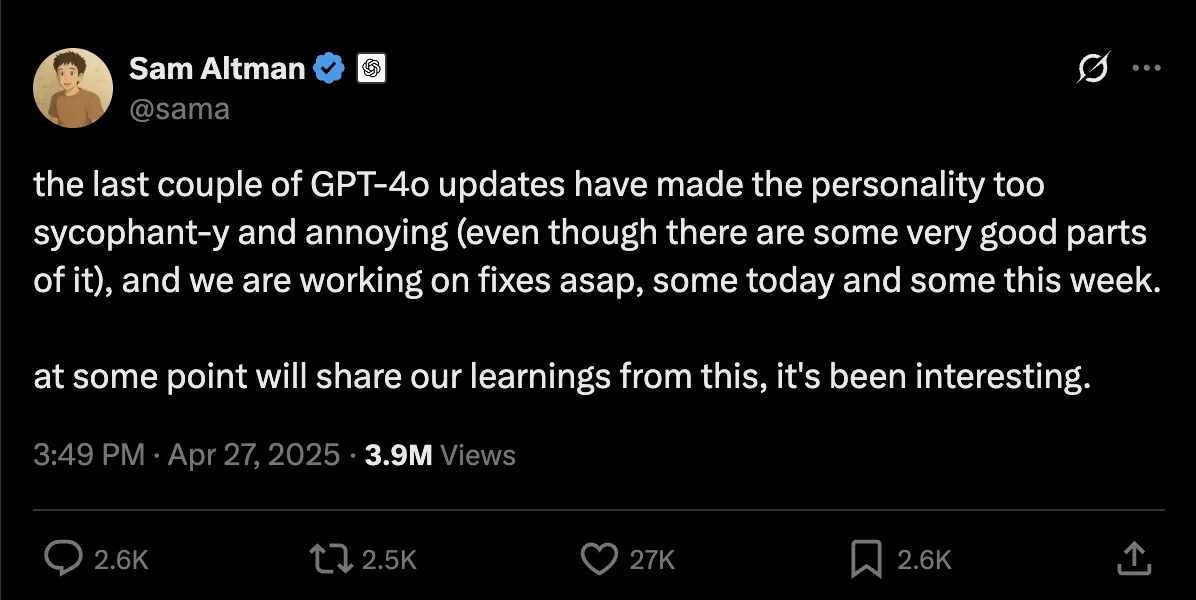

At the same time, designing a model without considering how it might cause attachment behaviors creates a significant risk of harm. AI Psychosis is gaining attention as a real behavior pattern caused by the sycophantic nature of AI models.

Mustafa Suleyman dubbed these implementations “Seemingly Conscious AI”: models that feel person-like without being sentient, which drives parasocial attachment and unmitigated trust [SCAI].

Frontier model companies are beginning to address the risk of this attachment behavior.