The best way to understand how to produce high-quality or consistent generations, or troubleshoot generations gone awry, is to look under the hood. Describe is a user-invoked action that breaks a generated result into the components that likely produced it, revealing the prompt or a best-guess reconstruction, parameters, and sometimes token or selection details.

Describe is typically triggered by a button or link action. In API-centric tools it shows inputs and logs for a single run. Evidence for this pattern is strongest in image products and developer consoles. Where products do not expose prompts or tokens per run, this action should not appear.

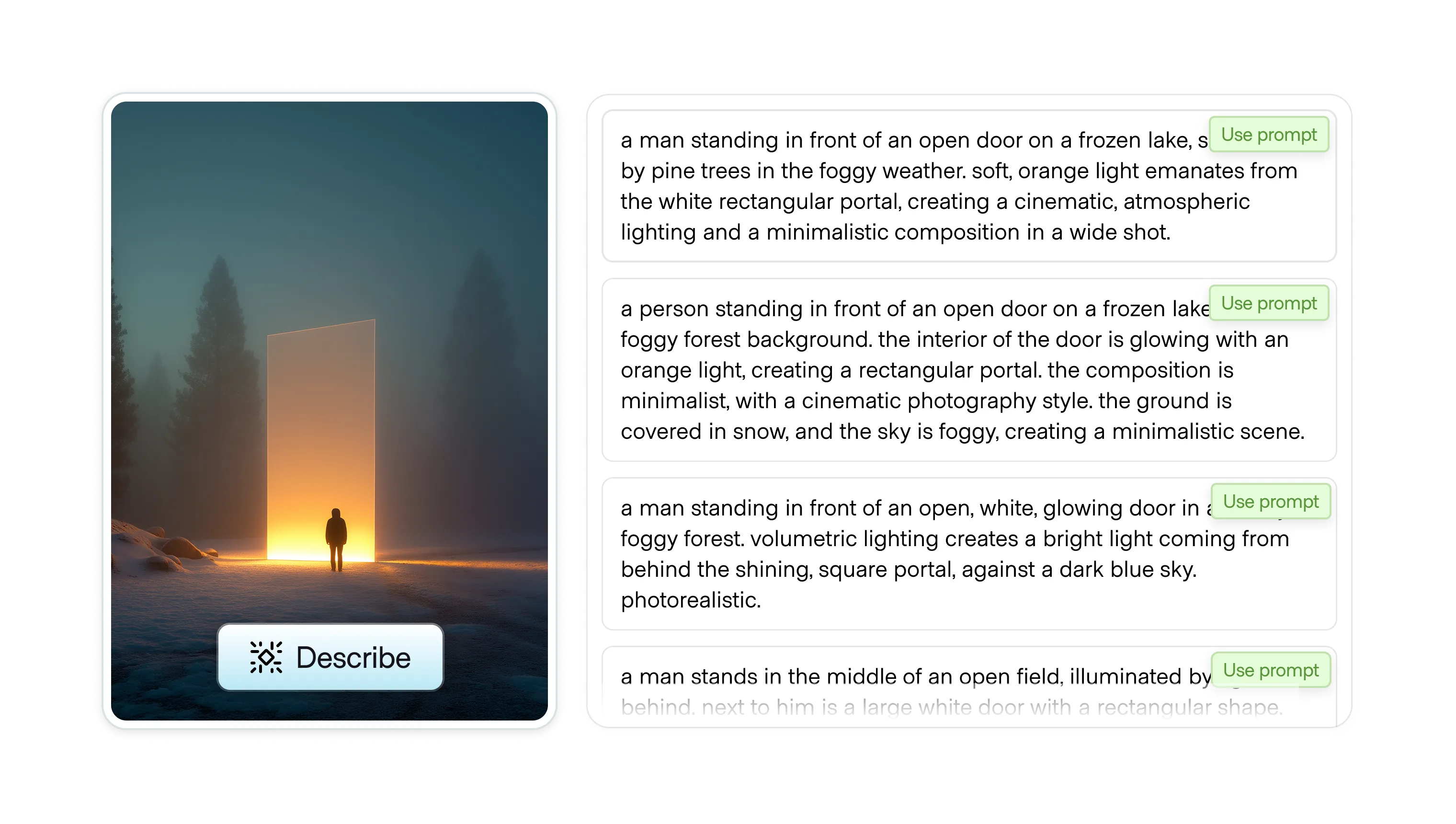

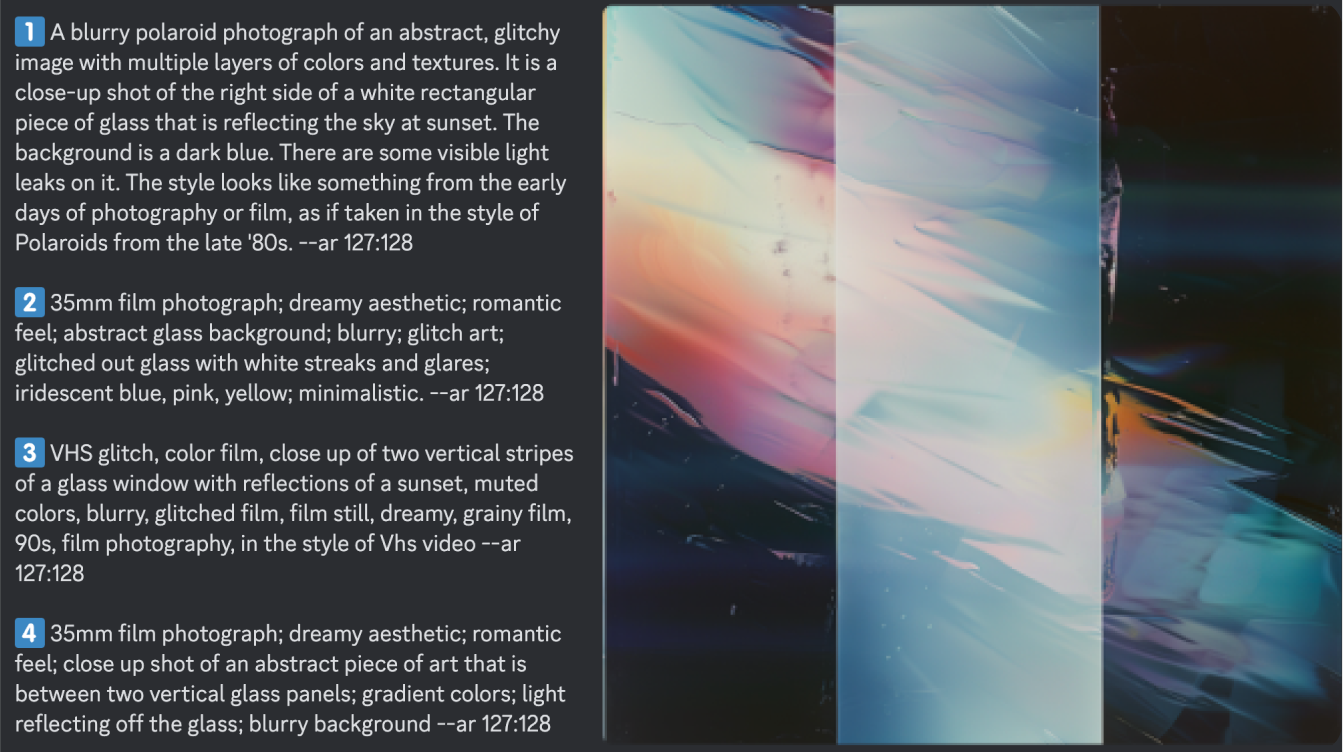

As of late 2025, only a few platforms make use of this as an interface pattern. Notably Midjourney’s /describe function reveals the tokens and implied prompt behind an image. This can be applied to images that the generator produced, or human-created files. Using the variations pattern, Midjourney reveals four different interpretations of the image, and makes it easy for the user to reproduce each option to evaluate how closely it can reproduce the original tone, subject, and context.

Alternatively, when interacting directly with an AI, you can simply ask it to share the tokens it relied on. This is not a technique layman users will be familiar with however, so it's unreliable as a solution. Furthermore, unless you are using the direct input to communicate with the AI, there is no mechanism to ask for its intent. This leaves the user blind.

The describe action is closely related to prompt visibility. However, the former is a user-initiated action while the latter supports the visibility of the prompt, tokens, and parameters that went into a generation from shared examples and galleries.