A picture is worth a thousand words, or in this case, tokens.

When existing content is used as the basis for further generation, its underlying tokens can operate as a prompt in and of themselves. Using prompt layering or references, users can build multiple iterations from a single input, remixing it as many times as they need until they find what they are looking for.

Remixing is a broad category of actions that change the structural form of an existing piece of content while retaining its modality. If you intend to change the modality itself (e.g., convert a text file into a presentation), use transforming patterns. If you only want to change the style of the content (tone, mood, etc.) and leave everything below the surface layer intact, use restyling.

Sample forms of remixing include:

- Adjust the lead or thesis of an essay

- Swap POV or audience

- Change narrative pattern: essay to Q&A, debate, case study, FAQ

- Add new visual tokens or a new reference to an image

- Condense a long video into a collection of clips for quick review

Pairing remixing with inline actions

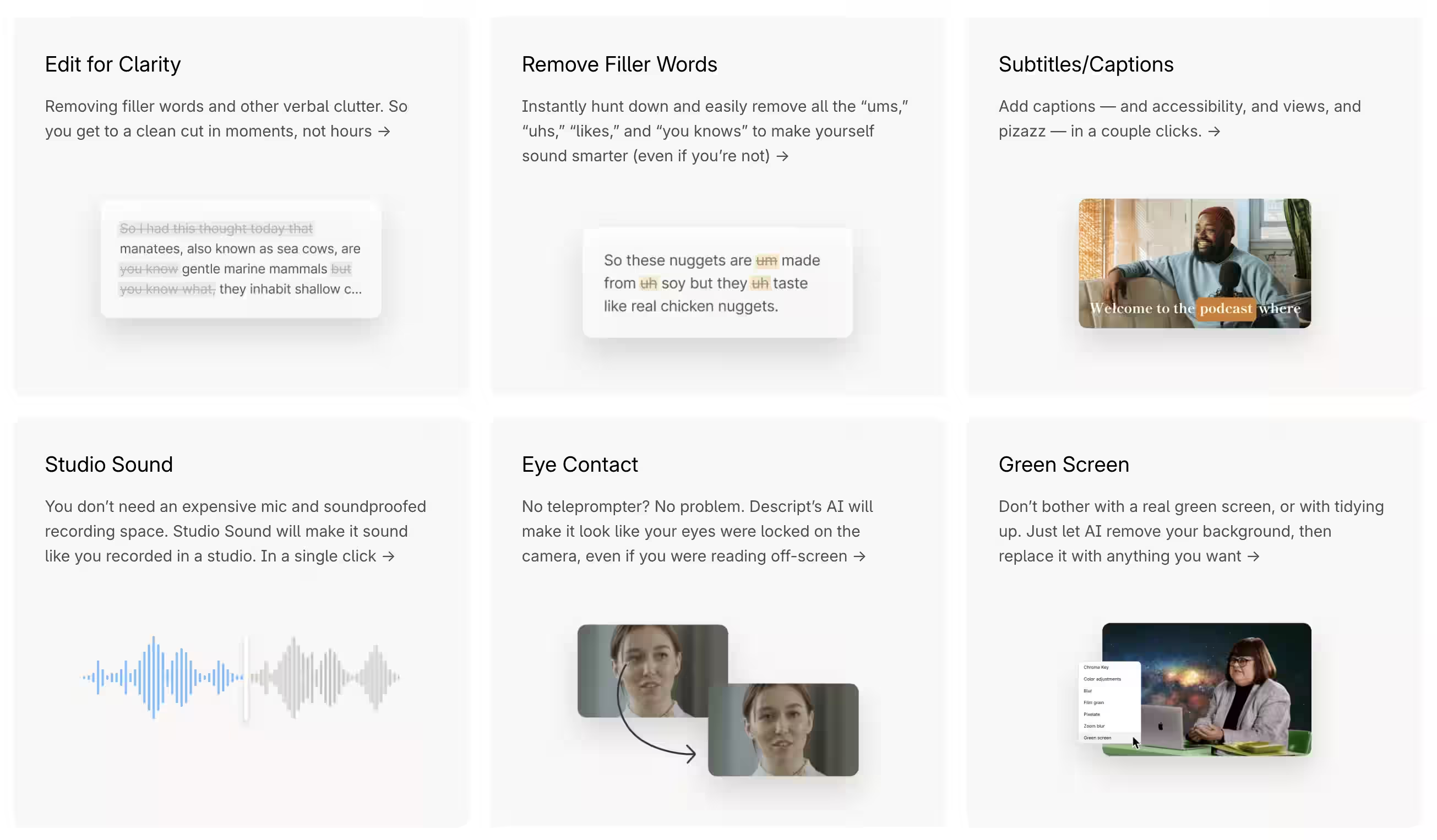

People struggle to write effective prompts. At this point we should simply accept this as true and look for ways to let users communicate as much information as possible without having to engineer prompts. Pre-set remixing actions let users work with AI to improve a piece of content with a specific intent, on demand. For example, Descript supports remix actions like "remove filler words." Users don't have to define what these words are in order to run the action; the system simply knows and acts intelligently on their behalf.
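One way to model a pre-set action is as a named instruction that the system expands on the user's behalf: the user clicks a label and never sees or writes the underlying prompt. The Python sketch below is hypothetical. `RemixPreset`, `PRESETS`, `build_prompt`, and the filler list are illustrative assumptions, not how Descript implements its feature. `remove_filler_words` is a crude rule-based stand-in for a model call, included only to show that the user never has to define what counts as filler.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class RemixPreset:
    """A named remix action whose full instruction stays hidden from the user."""
    label: str        # what the user sees and clicks
    instruction: str  # the prompt the system sends on the user's behalf


# Hypothetical preset registry; labels and wording are illustrative only.
PRESETS = {
    "remove_filler": RemixPreset(
        label="Remove filler words",
        instruction=(
            "Remove verbal filler such as 'um', 'uh', and 'you know' "
            "without changing any other wording."
        ),
    ),
    "to_faq": RemixPreset(
        label="Turn into FAQ",
        instruction="Restructure this content as a list of questions and answers.",
    ),
}


def build_prompt(preset_key: str, content: str) -> str:
    """Layer a preset's hidden instruction on top of the existing content."""
    preset = PRESETS[preset_key]
    return f"{preset.instruction}\n\n---\n{content}"


# Assumed filler list for the rule-based stand-in (a real system would use a model).
FILLERS = ("um", "uh", "erm")


def remove_filler_words(text: str) -> str:
    """Drop standalone filler words (and a trailing comma), then tidy spacing."""
    pattern = r"\s*\b(?:" + "|".join(re.escape(f) for f in FILLERS) + r")\b,?"
    cleaned = re.sub(pattern, "", text, flags=re.IGNORECASE)
    return re.sub(r"\s{2,}", " ", cleaned).strip()
```

For example, `remove_filler_words("Um, I think, uh, we should ship it.")` returns `"I think, we should ship it."`, while words that merely contain a filler, such as "drummer" or "umbrella", are left alone because of the word-boundary checks.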

How to design a remixing flow

- Let users attach multiple references. Consider allowing them to adjust the weight of those references (see: Midjourney's Omni Reference approach)

- Start from an existing piece of content or let users upload/construct something new

- Consider using token transparency so users know what they are working with (see: FloraFauna's "enhance prompt" feature to show the tokens behind an image or video in the input card)

- Use previews to show how the remixed output is coming along, so users can stop the generation or submit a new adjustment without waiting for the final result

- Deploy variations with intensity sliders, which create multiple branches for users to select from and work with

- Allow remixing in place via inpainting if only parts of the content are being adjusted. This way users can target specific areas of the canvas without recreating the entire composition

- For code (and other text-based content), show a structured diff: what moved, what was added, what stance changed.

- Consider requiring users to verify the change when a remix was made via inpainting rather than by generating new variations

- Let users promote a remix to a new working draft and start a new branch

- Use footprints to let users trace back through the results of remixing to find the original source
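Several of the points above (variations that create branches, promoting a remix to a working draft, flagging inpainted changes for verification, and footprints back to the original source) depend on keeping a version history. A minimal in-memory sketch in Python, assuming a simple tree in which every remix points to its parent; `Version`, `Project`, and the action labels are hypothetical names, not any product's API.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import count

_next_id = count(1)  # simple in-memory id source (assumption)


@dataclass(eq=False)
class Version:
    """One node in a remix history: an original upload or any remix of it."""
    content: str
    action: str  # hypothetical labels, e.g. "upload", "variation", "inpaint"
    parent: Version | None = field(default=None, repr=False)
    children: list[Version] = field(default_factory=list, repr=False)
    id: int = field(default_factory=lambda: next(_next_id))

    def remix(self, content: str, action: str) -> Version:
        """Record a remix as a child; remixing the same version twice branches."""
        child = Version(content=content, action=action, parent=self)
        self.children.append(child)
        return child

    def footprints(self) -> list[Version]:
        """Follow parent links back to the original source, oldest first."""
        trail: list[Version] = []
        node: Version | None = self
        while node is not None:
            trail.append(node)
            node = node.parent
        return trail[::-1]

    def needs_review(self) -> bool:
        """Flag in-place edits (inpainting) for explicit user verification."""
        return self.action == "inpaint"


@dataclass
class Project:
    """Tracks which version the user currently treats as the working draft."""
    draft: Version

    def promote(self, version: Version) -> None:
        """Make a remix the new working draft; later remixes branch from it."""
        self.draft = version
```

In use, two variations of an uploaded essay become sibling branches; promoting one and inpainting it yields a version whose `footprints()` actions read `["upload", "variation", "inpaint"]` and whose `needs_review()` is `True`. A production system would persist this tree rather than hold it in memory, but the parent links are what make tracing back to the source possible.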