Workflows combine multiple actions into a repeatable, predictable process. They appear in AI experiences in two forms:

As agent capabilities grow, usage will shift toward the latter form: workflows that the agent generates itself to explain what it did or plans to do. As this happens, interfaces will need to serve as an inspection surface in addition to a construction tool.

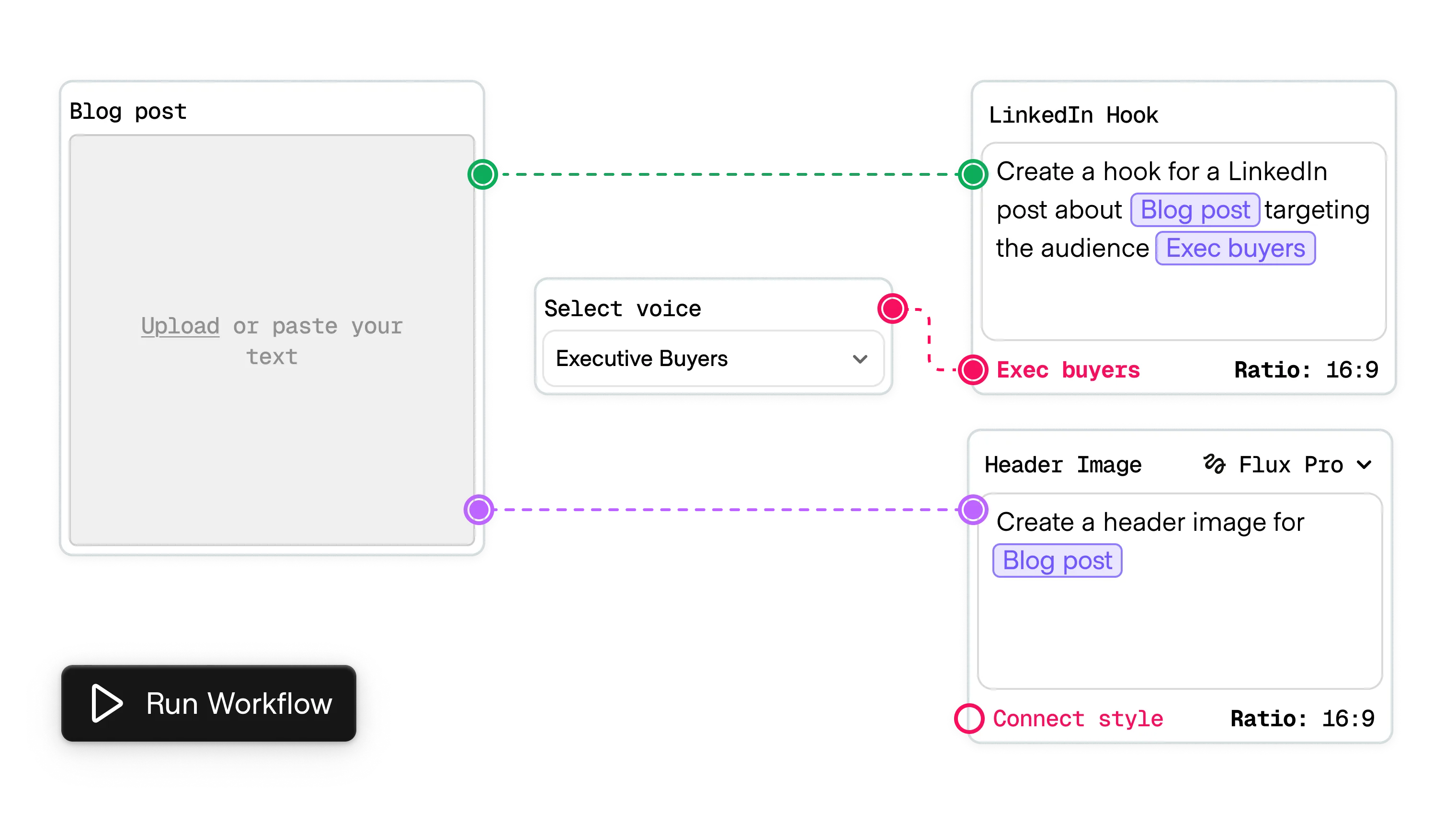

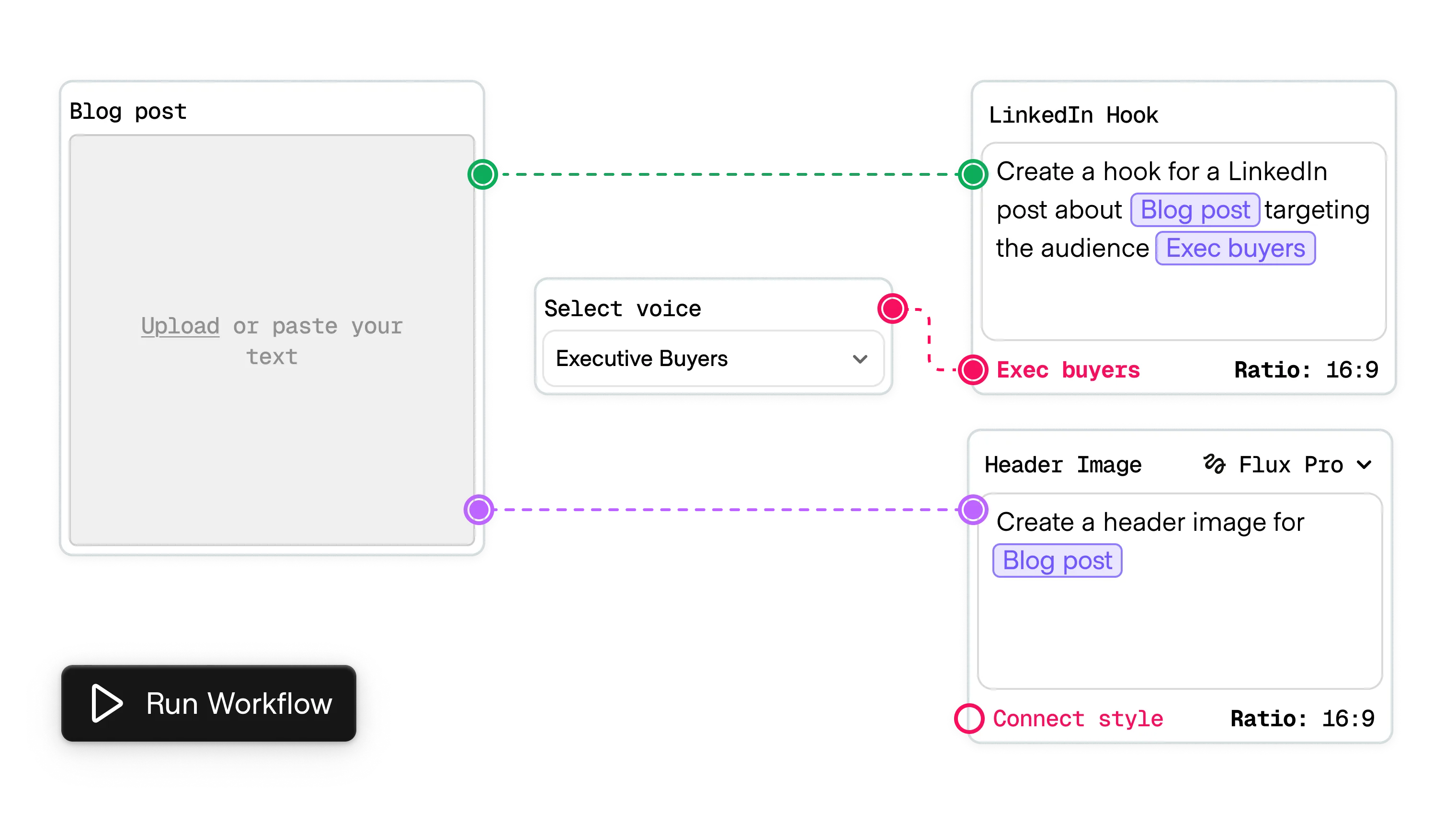

Unlike ad hoc prompt chaining, workflows are constructed in advance, using set instructions and variables that allow them to run with consistent outcomes over and over again. This consistency makes them a powerful tool for teams who need reliable results across repeated tasks.
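To make "set instructions and variables" concrete, here is a minimal sketch, assuming a workflow is simply an ordered list of fixed instruction templates plus a declared set of variables. The `Workflow` and `Step` classes and the example content are hypothetical and not tied to any particular product. Because the instructions never change between runs, the same inputs always yield the same sequence of prompts.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class Step:
    """Hypothetical step: a fixed instruction with $-placeholders for variables."""
    name: str
    instruction: str


@dataclass(frozen=True)
class Workflow:
    """Hypothetical workflow: its steps and required variables are fixed in advance."""
    name: str
    variables: tuple[str, ...]
    steps: tuple[Step, ...]

    def render(self, values: dict[str, str]) -> list[str]:
        """Fill every step's instruction with the supplied variables.

        Raises KeyError if a declared variable is missing, so every run
        starts from the same complete set of inputs.
        """
        missing = [v for v in self.variables if v not in values]
        if missing:
            raise KeyError(f"missing variables: {missing}")
        return [Template(s.instruction).substitute(values) for s in self.steps]


# Defined once by a single owner, then reused by anyone on the team.
summarize = Workflow(
    name="weekly-feedback-summary",
    variables=("product", "week"),
    steps=(
        Step("collect", "List all customer feedback about $product for week $week."),
        Step("summarize", "Summarize the feedback about $product into five themes."),
    ),
)

prompts = summarize.render({"product": "Checkout", "week": "32"})
```

Only the variable values differ from run to run; the instructions themselves stay locked, which is what separates this from ad hoc prompting.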

Workflows reduce fragmentation. Instead of team A prompting AI to synthesize content in one way and team B writing their own prompts for a similar task, a workflow lets a single owner define the steps for everyone. Those workflows can then be reused, adapted, or templatized across an organization.

Their use also improves control over how data moves across systems. Prompts can be designed to pull information from third-party tools, transform it, and then pass it to the next stage in the sequence. This creates a centralized orchestration layer for retrieval, summarization, and structured responses.
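The retrieve, transform, and pass-along sequence can be sketched as a single orchestrating function that feeds each stage the previous stage's output and records every intermediate result. This is an illustrative sketch only: `run_pipeline` is a hypothetical helper, and `fetch_tickets` and `to_structured_summary` are stand-ins for a third-party tool call and an AI summarization step, respectively.

```python
from typing import Any, Callable

Stage = Callable[[Any], Any]


def run_pipeline(
    stages: list[tuple[str, Stage]], initial: Any
) -> tuple[Any, list[tuple[str, Any]]]:
    """Run stages in order, feeding each one the previous stage's output.

    Returns the final output plus a trace of every intermediate result,
    so the whole data flow can be inspected from one place.
    """
    trace = []
    data = initial
    for name, stage in stages:
        data = stage(data)
        trace.append((name, data))
    return data, trace


# Hypothetical stand-in for a third-party tool; a real workflow would call an API here.
def fetch_tickets(tag: str) -> list[dict]:
    tickets = [
        {"id": 1, "text": "Login page times out", "tag": "bug"},
        {"id": 2, "text": "Please add dark mode", "tag": "feature"},
        {"id": 3, "text": "Password reset email never arrives", "tag": "bug"},
    ]
    return [t for t in tickets if t["tag"] == tag]


def extract_text(tickets: list[dict]) -> list[str]:
    return [t["text"] for t in tickets]


# Stand-in for an AI step; here it only packages the items into a structured response.
def to_structured_summary(texts: list[str]) -> dict:
    return {"count": len(texts), "items": texts}


result, trace = run_pipeline(
    [
        ("retrieve", fetch_tickets),
        ("transform", extract_text),
        ("summarize", to_structured_summary),
    ],
    "bug",
)
```

Keeping the trace in the orchestrator, rather than inside each stage, is what makes it a centralized layer: one place shows what was retrieved, how it was transformed, and what was returned.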

In addition to standard steps, workflows should support multiple AI-powered actions, each following the best practices of its corresponding pattern. For example:

In a shared surface, users need clear boundaries: what was specified by them versus what was improvised by the agent. Without that distinction, trust erodes because people don’t know which parts they control.
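One way to keep that boundary visible is to tag every step with its origin and show the tag wherever the plan is displayed. The sketch below assumes a simple two-value origin; `PlanStep`, `Origin`, `render_report`, and `agent_additions` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    """Who authored a step; the value is the label shown to the user."""
    USER = "specified by you"
    AGENT = "added by the agent"


@dataclass
class PlanStep:
    description: str
    origin: Origin


def render_report(steps: list[PlanStep]) -> str:
    """Render a numbered plan with every step labeled by its author."""
    return "\n".join(
        f"{i}. {step.description} [{step.origin.value}]"
        for i, step in enumerate(steps, start=1)
    )


def agent_additions(steps: list[PlanStep]) -> list[PlanStep]:
    """Return only the steps the agent improvised, e.g. to highlight them for review."""
    return [s for s in steps if s.origin is Origin.AGENT]


plan = [
    PlanStep("Pull open bugs from the tracker", Origin.USER),
    PlanStep("Deduplicate similar reports", Origin.AGENT),
    PlanStep("Summarize into themes", Origin.USER),
]
report = render_report(plan)
```

Storing origin as data on each step, rather than inferring it later, means any view of the workflow can show the distinction consistently.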

AI-powered workflows must provide views for oversight, checkpoints, and redirection. In practice, this means workflow UIs will need dual modes: “authoring mode” where humans define repeatable flows, and “reporting mode” where agents surface their own logic for users to review or override.
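A checkpoint can be modeled as a review hook that runs after each step, before its output moves on. In this sketch, assuming a reviewer that either approves (returns `None`) or supplies a replacement value, an override redirects everything downstream. `run_with_checkpoints` and the example steps are hypothetical.

```python
from typing import Callable, Optional

# A reviewer sees (step_name, proposed_output) and returns None to approve,
# or a replacement value to override the agent's output.
Reviewer = Callable[[str, str], Optional[str]]


def run_with_checkpoints(
    steps: list[tuple[str, Callable[[str], str]]], initial: str, review: Reviewer
) -> list[tuple[str, str, bool]]:
    """Run steps in order, pausing at each one for review.

    Returns a log of (step_name, accepted_output, was_overridden) so the
    run can later be shown back to the user as a report.
    """
    log = []
    data = initial
    for name, step in steps:
        proposed = step(data)
        override = review(name, proposed)
        overridden = override is not None
        data = override if overridden else proposed  # downstream steps see the accepted value
        log.append((name, data, overridden))
    return log


steps = [
    ("draft", lambda topic: f"Summary of {topic}"),
    ("polish", str.upper),
]

# This reviewer edits the draft and approves everything else.
log = run_with_checkpoints(
    steps,
    "Q3 churn",
    lambda name, proposed: "Summary of Q3 churn, edited" if name == "draft" else None,
)
```

The returned log doubles as material for the reporting mode: it records what each step produced and where a human stepped in.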

In this context, workflow design must consider affordances and constraints beyond its component steps.

Strong system rules help ensure that workflows run seamlessly, even as the AI adjusts its prompting and logic on the fly.